Swift CG+ User Manual

Reference Manual

| About | Description |

| Revision | 4.6_4 |

| History | For 4.6_1 to 4.6_4 versions |

| Authors | Jason Wood; Justin Avery |

Overview

About this manual

The Swift CG + manual is the operator’s reference manual. It details all the features, functionality and tools accessible in your version of Swift CG +. Some examples are given where appropriate to clarify use; for more in-depth, training-related material on creative operational usage, techniques and workflows, please refer to the appropriate training materials and tutorials.

About Swift CG +

Swift CG + provides all of the tools required to create all graphics that can be run through Swift. This includes:

- Template graphics, which can be driven via Playout, or controlled remotely using Swift Live and a suitable Custom Control application

- Tactic Telestration graphics

- Rich touchscreen-driven graphics applications

- Graphics for use on systems that have an embedded dll.

Swift CG + is used before transmission to produce the graphics that will be run live.

Swift CG + has evolved around the premise that designers will originate graphics with traditional content creation applications – such as those from Adobe and Autodesk – and a Swift Live operator will then import them as “assets”. The imported assets are then used as components in the template creation process. The Swift CG + application provides the toolset that facilitates the templatisation of graphical content.

Swift CG + scripts and projects

All Swift CG+ graphics sit inside a project. A project contains all of the assets required by the graphics that are in it. Examples of assets are fonts, geometries and images.

Graphics are stored on disk as a Ruby script, with the .rb extension. Ruby is a scripting language; details are available at https://www.ruby-lang.org. Because of this, it is quite common for the words “Graphic” and “Script” to be used interchangeably. They refer to the same thing.

The graphic file contains :

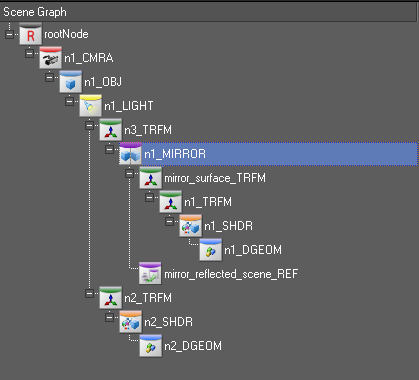

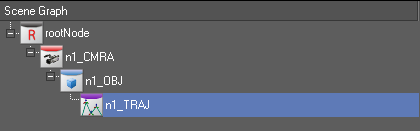

- Information on the scenegraph – the hierarchy of graphical elements that are required to render the graphic.

- References to assets, such as bitmaps, fonts and geometries

- Methods, which contain instructions on how to animate the graphic, which external data should be used to populate the graphic.

The Swift CG + GUI

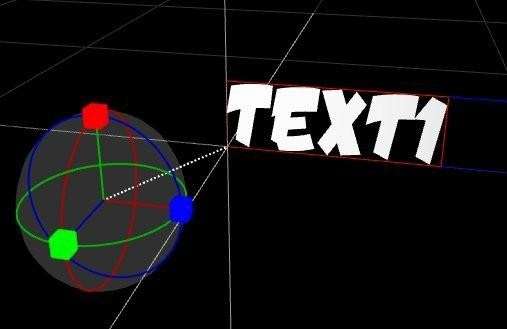

Swift CG +’s graphical user interface borrows much from traditional content creation applications. The 3D viewport with its virtual camera paradigm will be familiar to anyone who has worked with a traditional 3D application. The scrubbable timeline, keyframeable graphics, curve editors and scenegraph editor, which enables flexible node-based editing using the standard parent-child hierarchy, will also be familiar to experienced editors, motion graphic designers and compositors.

The GUI also provides drag-and-drop tools for establishing MOS inputs, connections to database information, user code extensions and scripting, and for generating external interfaces specific to a given project’s requirements. IT specialists will find these tools familiar.

Command line arguments

There are a number of command line arguments with which you can start Swift to override the default preference settings.

| Command Line Argument | Description |

| –help | Show a help message listing available command line arguments |

| –version | Show the current version of Swift and exit |

| –prefs <file> | Load the specified preferences file instead of the usual preferences file. |

| –project <file> | Load the specified project instead of the project in preferences |

| –customProject <file> | Load the specified project instead of the custom project in preferences |

| –noProject | Do not load the project specified in preferences |

| –noCustomProject | Do not load the custom project specified in preferences |

| –cue <port> | Set the port for a serial cue device |

| –run <runMode> | Sets the mode that Swift will run up in, overriding the mode set in preferences. Swift must be licensed for the mode specified for this to work. Current available modes are playout, live, edit, and sports |

| –preview | Runs up Swift as a preview renderer |

| –diags <true/false> | Show or hide the diagnostics window in live mode. |

| –remote <mosid> <ncsid> <encoding> <timeout> <upperport> <lowerport> | Turn on remote MOS control |

| –cache | Enable caching of scripts when running in the remote mos control mode |

| –custom <addr> <port> | Custom plugin protocol control (use addr=none for server) |

| –render <addr> <port> | Swift->Swift client/server (use addr=none for server) |

| –plugin <dir> | Specify a directory to load plugins from |

| –sound <true/false> | Enable sound |

| –qscreen <0/1> | dual Quadro SDI o/p |

| –locale <locale> | Choose a locale |

| –analyser | enable parallel port for logic analyser |

| –dvsCard <#num> | dvs video card number |

| –systemDir <dir> | override the Swift system directory |

| –messagesDirectory <dir> | directory for offline render messages |

| –controlURL <url> | control machine for remote scenegraph interaction |

| –layerRouting | Enables layer routing. See the layer node reference for more details. |

| –SDI2 | Enables a second set of SDI output channels, if available |

| –mux | Turns on Foreground/background alpha-muxing, compatible with Ultimatte external mixing. |

| –vr <vr_type> <vr_file> | Allows you to specify the VR tracking type and calibration file from the command line. |

| –videoDisplayRender <true/false> | Turns on or off the monitor render display |

| –punditInterface <punditDir> | Specify the name of the pundit .pro file from the command line. |

| –skinDirectory <skinDir> | Specify the Swift sports pundit skin directory from the command line. |

| –lws <web_directory> <port> | Set up the Swift web server from the command line. |
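As a rough illustration of how a few of the arguments in the table above combine, the sketch below assembles an argument list in Python. The executable name `swift`, the helper `build_swift_args`, the example project path, and the choice of a single ASCII hyphen to stand for the dash printed in the table are all assumptions made for illustration; check them against your installation before relying on them.

```python
from typing import List, Optional

# Run modes listed in the description of the run argument.
VALID_RUN_MODES = {"playout", "live", "edit", "sports"}


def build_swift_args(
    executable: str = "swift",  # assumed executable name
    dash: str = "-",            # assumed ASCII form of the dash in the table
    run_mode: Optional[str] = None,
    project: Optional[str] = None,
    prefs: Optional[str] = None,
) -> List[str]:
    """Assemble a command line from a few of the documented arguments."""
    if run_mode is not None and run_mode not in VALID_RUN_MODES:
        raise ValueError(f"unknown run mode: {run_mode!r}")

    args = [executable]
    if prefs is not None:
        args += [f"{dash}prefs", prefs]
    if project is not None:
        args += [f"{dash}project", project]
    if run_mode is not None:
        args += [f"{dash}run", run_mode]
    return args


# Hypothetical project path, used only to show the shape of the result.
example_args = build_swift_args(run_mode="live", project="/projects/News/News.prj")
print(" ".join(example_args))
```

The resulting list could be handed to `subprocess.run`, for example, once the executable name and dash style have been confirmed.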

Project Menu

Overview

Swift holds all of the assets and graphics required to run a show in a set of directories called a project.

The Project directory contains a set of sub-directories. When creating a project, the user will choose the name of the Project directory and a project file will automatically be created with the same name, inside the Project directory.

The project is laid out under two directories:

- GMScript
  - Templates
  - Lib
  - Stacks
  - Save
  - Backups

- GMData
  - CGProgram
  - Extruders
  - FBX
  - Fonts
  - Geometry
  - Images
  - LineStyles
  - Maps
  - Materials
  - Paths
  - Plugins
  - Shaders
  - Skeleton
  - Sounds
  - StaticMaps
  - Textures
  - VR

GMScript holds the graphics files, and other files relating to the live playout of the graphics. GMData holds the assets that are used by the graphics.

The subdirectories and directories are automatically created and named by Swift and should not be renamed under most circumstances.
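Because the directory names are fixed, a project folder can be sanity-checked from outside Swift. The following is a minimal sketch, assuming that the first five listed sub-directories sit under GMScript and the remainder under GMData; the function name `missing_directories` is hypothetical and not part of Swift.

```python
from pathlib import Path
from typing import List

# Sub-directory names as listed in this manual; the grouping under
# GMScript and GMData is an assumption based on their descriptions.
PROJECT_LAYOUT = {
    "GMScript": ["Templates", "Lib", "Stacks", "Save", "Backups"],
    "GMData": [
        "CGProgram", "Extruders", "FBX", "Fonts", "Geometry", "Images",
        "LineStyles", "Maps", "Materials", "Paths", "Plugins", "Shaders",
        "Skeleton", "Sounds", "StaticMaps", "Textures", "VR",
    ],
}


def missing_directories(project_dir: Path) -> List[str]:
    """Return the expected sub-directories that are absent from project_dir."""
    missing = []
    for parent, children in PROJECT_LAYOUT.items():
        for child in children:
            relative = Path(parent) / child
            if not (project_dir / relative).is_dir():
                missing.append(relative.as_posix())
    return missing
```

Calling `missing_directories(Path("/projects/News"))` on a hypothetical project path returns an empty list when every expected directory is present.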

The project options are accessible via the Project menu or via the project toolbar.

New Project

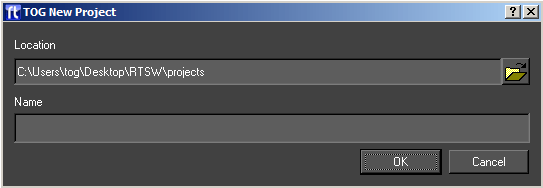

To create a new project, open the “Project” pull-down menu and select “New”, or click the New icon on the project toolbar. This will pop up the New Project dialog.

Select a suitable location and name for the project and press OK. A new project and all related subdirectories will automatically be created. The project directory and project file (.prj) will have the name specified.

Open

Select Open from the project drop down or toolbar to open an existing project. This will pop up the standard file chooser. Navigate to the required project directory and select the associated .prj file. Once selected, any open project will be closed and the selected project will load into Swift.

Save

Select Save from the project drop down or toolbar to save your currently open project. The user will be prompted to save the current graphic and modified shaders.

Save As

Select Save As from the project drop down or toolbar to save the current project to another location. The user is prompted for a new project file name and directory. The project will then be saved to the new directory using the name provided.

Recently Opened

Selecting this from the project drop down will display a list of recently opened projects.

Selecting an item from the list will have the same effect as browsing for and opening a project via the Project -> Open menu option.

Close

Selecting Close from the project drop down or toolbar will close the currently opened project. The user will be prompted whether or not to save any changes which have been made to the project.

Project Settings

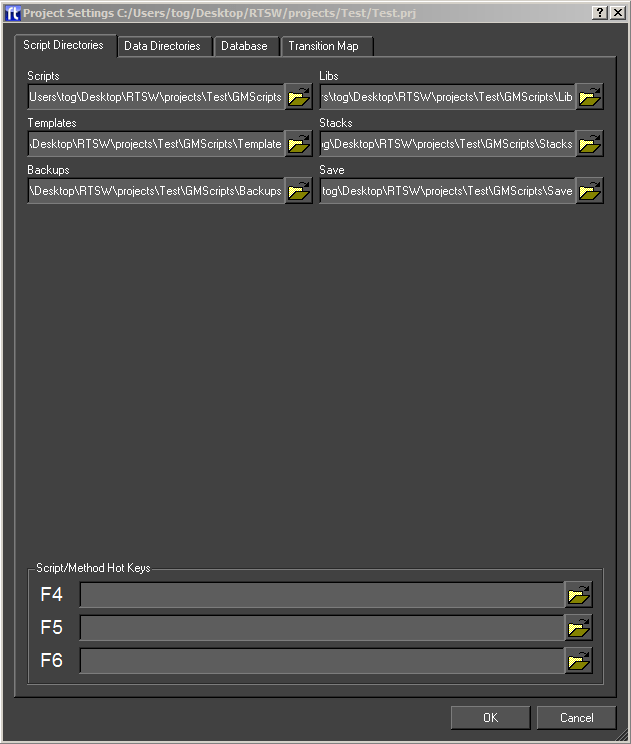

Selecting Project Settings from the project drop down or toolbar will pop up the Project settings dialog.

This shows the name and path of the currently loaded project. The following tabs allow settings to be changed.

Script Directories

This tab shows the directory paths inside of this project. It is possible to point these directories to new locations from this tab. However, this is very rarely needed.

- Scripts – This is the top directory that contains the following:
  - Templates – These are the main graphics that are run from playout, live or sports.
  - Libraries – These are reusable drop-in modules that may be used in templates.
  - Stacks – This is the location of graphic sequences saved from playout or sports.
  - Backups – This is the location of auto and manual backups made during edit.
  - Saved Scripts – This is not used.

Hotkeys

In playout, it is possible to trigger graphics by using hotkeys. Up to three hotkeys can be created, on the function keys F4, F5 and F6.

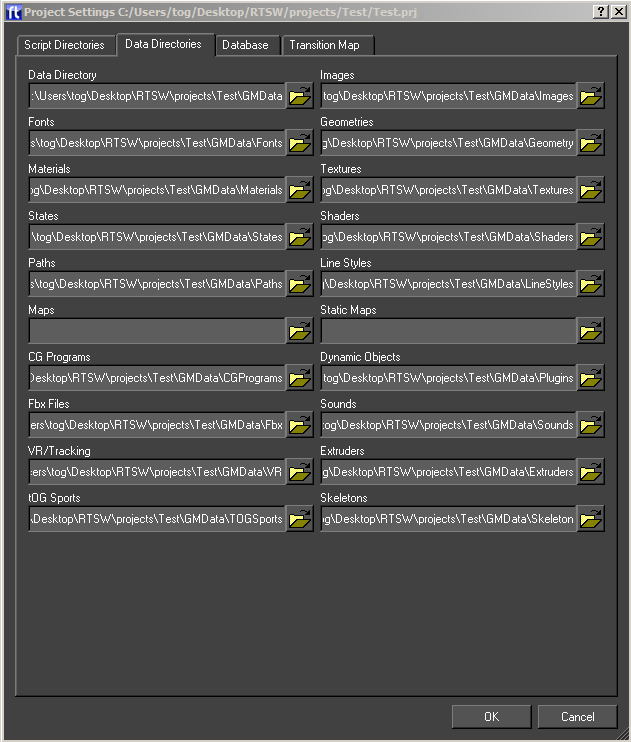

Data Directories

The Data Directory tab allows the user to specify the locations of project assets.

- Images

- Fonts

- Geometries

- Materials

- Textures

- States

- Shaders

- Paths

- Line Styles

- Maps

- Static Maps

- CG Programs

- Dynamic Objects

- Fbx files

- Sounds

- VR/Mix/Tracking Data

- Extruders

- Tog Sports

- Skeletons

To change the current location, click on the file chooser button, navigate to the new location and press OK.

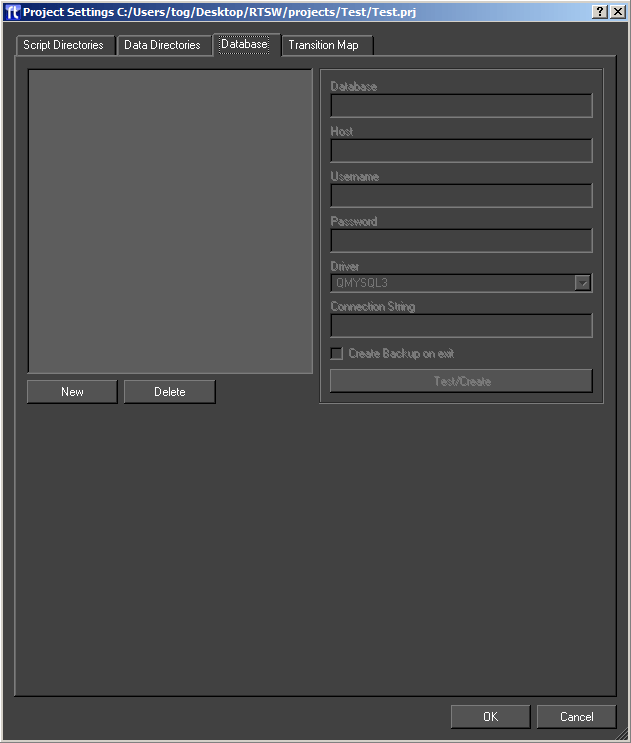

Database

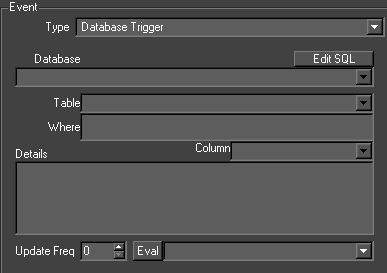

The Database tab allows the user to specify databases for this project.

Databases are one means of providing external data to a graphic. See the section on Inputs under timeline. Swift has built-in support for MySQL and any database that provides an ODBC bridge.

To create a new database, click New and fill in the details. Remember to press Return after each entry. Once the database is created, click Test/Create to test the connection. If the database does not exist then you will be prompted to create it.

Clicking Delete will remove the database from the project. It will not drop the database from the database server.

| Option | Description |

| Database | The name of the database. |

| Host | Type the Host name of the database server in this field. This can be a URL or IP address. |

| Username | The username required to connect to the database server |

| Password | The password required to connect to the database server |

| Driver | Choose the driver that is correct for the database server. For MySQL, choose QMYSQL3; for an ODBC database, choose QODBC3. |
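The fields in the table above can be thought of as a single settings record. The sketch below models that record in Python and checks the entries that must be present before a connection test makes sense. The class `DatabaseSettings`, the `problems` method and the example values are hypothetical; only the field names and the two driver names come from this manual.

```python
from dataclasses import dataclass
from typing import List

# Driver names quoted from the Driver row of the table above.
KNOWN_DRIVERS = {"QMYSQL3", "QODBC3"}


@dataclass
class DatabaseSettings:
    database: str
    host: str
    username: str
    password: str
    driver: str

    def problems(self) -> List[str]:
        """List obvious gaps in the settings (empty means none found)."""
        found = []
        if not self.database:
            found.append("database name is empty")
        if not self.host:
            found.append("host is empty")
        if self.driver not in KNOWN_DRIVERS:
            found.append(f"driver {self.driver!r} is not QMYSQL3 or QODBC3")
        return found


# Example values are placeholders, not defaults shipped with Swift.
settings = DatabaseSettings("results", "192.168.0.10", "swift", "secret", "QMYSQL3")
print(settings.problems())
```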

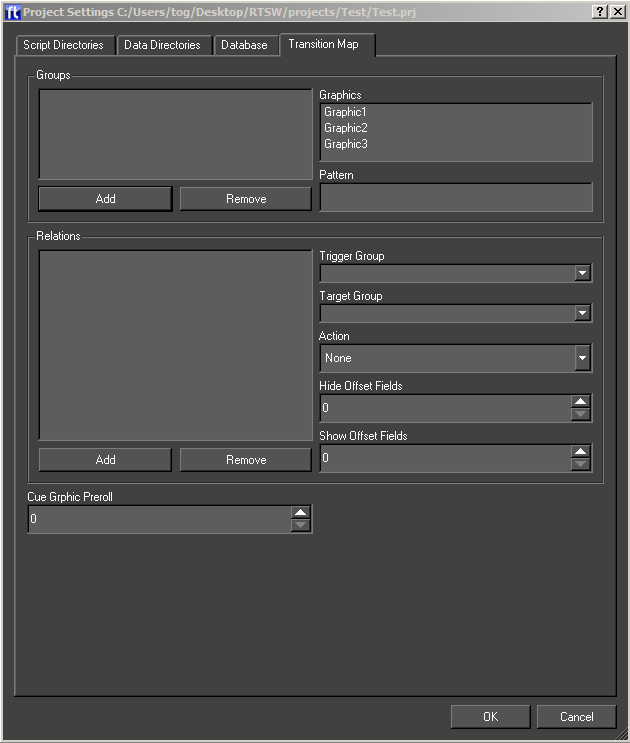

Transition Map

When running graphics in a live environment, it is a common requirement to have some level of automation over which graphics are on air, and which ones are not.

As a simple example, imagine that you have a Lower Third graphic, and a Full Form graphic. Having both on the screen at once would cause the graphics to overlap, so you want to make sure that both cannot be on screen at the same time.

You could do this manually, by calling the appropriate methods yourself, but this puts the workload onto the operator, who may make mistakes.

Transition Maps allow the logic of how graphics interact to be specified at design time.

NOTE: Changes to the transition map are only saved when OK is pressed.

Groups

The transition map logic works as relationships between groups of graphics.

To add a group, click the Add button. To delete a group, click the Remove button.

A group can be anything that you wish. These would all be valid groups:

- Lower Third Strap

- Bug

- Full Frame Graphic

- Election Map

- Clock

- Stats Graphic

Graphics can be added to groups. A graphic can be in as many groups as is required. Taking the example groups mentioned above, a clock graphic could be in both the Clock group and the Lower Third Strap group. Similarly, a histogram graphic might be in both the Stats Graphic and the Full Frame Graphic groups.

The Graphics list shows a list of all graphics in the project, with graphics in the currently selected group appearing selected.

Ctrl+Click a graphic in the list to add it to, or remove it from, the currently selected group.

Relations

Relations define actions that occur when a graphic is run in Swift. The action (type) of a relation is triggered when both of the following are true:

- A graphic from the source group is currently on screen.

- The graphic coming on is from the destination group.

The action will apply to all graphics that currently match these criteria.

The available actions are described in the following table:

| Action | Description |

| BringOn | Call the bringOn method of the graphic in the source group |

| TakeOff | Call the takeOff method of the graphic in the source group |

| HideShow | Call the hide method of the graphic in the source group when a graphic from the destination group comes on screen. Once there are no graphics from the destination group on screen again, call the show method. |
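The matching rule for relations can be sketched as follows. This is an illustrative model only, not Swift's implementation: the `Relation` class, the function `actions_for_incoming` and the graphic names are hypothetical, and the later show step of HideShow is not modelled.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class Relation:
    source_group: str
    destination_group: str
    action: str  # "BringOn", "TakeOff" or "HideShow"


def actions_for_incoming(
    incoming: str,
    on_screen: Set[str],
    groups: Dict[str, Set[str]],
    relations: List[Relation],
) -> List[Tuple[str, str]]:
    """Return (graphic, action) pairs triggered when `incoming` is run."""
    triggered = []
    for relation in relations:
        # The incoming graphic must belong to the destination group.
        if incoming not in groups.get(relation.destination_group, set()):
            continue
        # The action applies to every on-screen graphic in the source group.
        source_members = groups.get(relation.source_group, set())
        for graphic in sorted(on_screen & source_members):
            triggered.append((graphic, relation.action))
    return triggered


groups = {
    "Lower Third Strap": {"NameStrap", "Clock"},
    "Full Frame Graphic": {"Histogram"},
}
relations = [Relation("Lower Third Strap", "Full Frame Graphic", "TakeOff")]
result = actions_for_incoming("Histogram", {"NameStrap"}, groups, relations)
print(result)
```

With a name strap on screen and a full frame histogram coming on, the single relation yields one TakeOff for the name strap, which matches the Lower Third and Full Form example earlier in this section.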

Edit Menu

The Edit menu contains the controls for Undo/Redo, Preferences and configuration for External Devices.

Undo/Redo

Undo/Redo is accessible via the Edit menu or via the toolbar. Swift supports undo/redo by saving the complete graphic before any operation that might alter it.

These undo/redo files are saved in the Backups directory of the project. The depth of undo/redo is only limited by disk space. The user can regress and progress through these files using the Undo and Redo tools on this menu.
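The snapshot approach described above can be illustrated with a small in-memory model. Swift writes its snapshots as files in the Backups directory; this sketch keeps them in Python lists instead, and the class `SnapshotHistory` is hypothetical. Clearing the redo list when a new operation is applied is a common convention and is assumed here rather than taken from this manual.

```python
import copy
from typing import Any, Callable, List


class SnapshotHistory:
    """Undo/redo by keeping complete copies of the state."""

    def __init__(self, state: Any) -> None:
        self.state = state
        self._undo: List[Any] = []
        self._redo: List[Any] = []

    def apply(self, operation: Callable[[Any], None]) -> None:
        """Save the whole state, then run an operation that may alter it."""
        self._undo.append(copy.deepcopy(self.state))
        self._redo.clear()  # assumed convention, see the note above
        operation(self.state)

    def undo(self) -> bool:
        """Step back one snapshot; return False if there is none."""
        if not self._undo:
            return False
        self._redo.append(self.state)
        self.state = self._undo.pop()
        return True

    def redo(self) -> bool:
        """Step forward one snapshot; return False if there is none."""
        if not self._redo:
            return False
        self._undo.append(self.state)
        self.state = self._redo.pop()
        return True


history = SnapshotHistory({"title": "Results"})
history.apply(lambda graphic: graphic.update(title="Final Results"))
history.undo()
print(history.state)
```

Saving whole snapshots keeps the logic simple, at the cost of storage that grows with every operation, which is consistent with the note that undo depth is limited only by disk space.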

Preferences

Overview

There is a range of parameters held within Swift which define its operational environment. Once specified, they do not usually change. They are accessible through the Preferences dialog under the Edit menu or the Edit toolbar. (You can also access these in Playout, Live and Sports.) Note also that some of these parameters may be overridden through command line arguments (see Command line arguments).

Preferences are split up into the following sections:

| Tab | Description |

| Protocol | Communication protocols and cue devices. |

| Video | SD/HD Video in and out setup. |

| Audio | Audio pass through and output. |

| Style | Swift look and feel setup. |

| Locale | Where to look for things and defaults. |

| Annotations | Display and interaction setup. |

| Run Mode | Run up mode and debugging. |

| Layoff | Render to disk setup. |

| Text Safe Markings | Text and picture safe setup. |

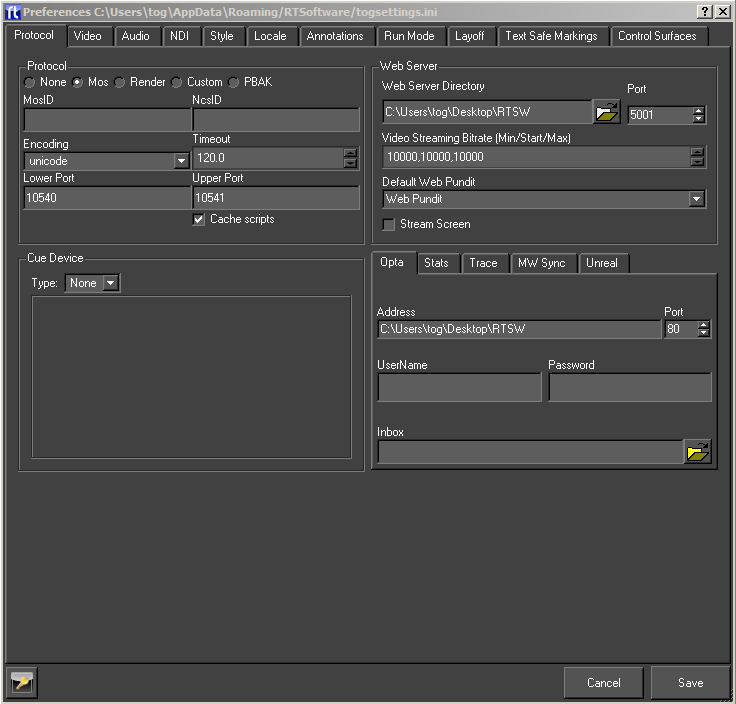

Protocol Tab

The protocol tab allows the setup of devices that control Swift, or that Swift can communicate with.

For more information on controlling Swift remotely, see the Swift Playout manual, and Swift Live manual.

Protocol

The Protocol section sets up a protocol that Swift will receive commands from.

None

Swift is not being controlled externally. This is the default.

MOS

Swift can be controlled by sending MOS messages to it using sockets. This is mainly used for playing graphics and methods. The protocol is described in the Live manual.

| Parameter | Description |

| MosID | The identifier for the Swift system when running in Live mode. Included in all MOS messages sent between the systems. |

| NcsID | The identifier for the application controlling Swift remotely. Included in all MOS messages sent between the systems. |

| Encoding | The character set encoding of the messages, either unicode (utf8) or ascii. |

| Timeout | If a message is not received by Swift within this time the client application is disconnected. Usually, the systems should heartbeat each other more regularly than this timeout time. |

| Lower Port | The client application sends commands on a socket connected to this port. |

| Upper Port | The client application reads status messages from a socket connected to this port. |

| Cache Scripts | If this is selected Swift will not clear out and destroy graphics after they are no longer needed. Swift keeps and reuses them. This speeds up loading. |

Render

Render mode in Swift allows the user to connect Swift to other Swifts. For more information, see the Playout documentation.

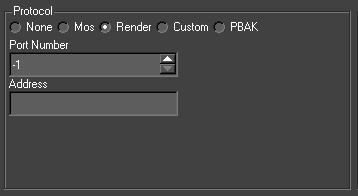

Custom

The Custom protocol tab allows a 3rd party to attach their own protocol layer.

This is achieved through the use of a shared object, either a DSO (Linux) or a DLL (Windows). This means that you can repurpose existing interfaces to drive Swift. See the Plugin manual for more information.

| Parameter | Description |

| Port Number | The port number for socket connections between the systems. |

| Address | The server address. This is only required by a client, it is blank for a server. |

Web Server

It is possible to communicate with and control Swift over standard web protocols. For more information, see the Swift Web API manual.

| Parameters | Description |

| Web Server Directory | The Web Server Directory defines the root directory for the Swift web server. Any files in this directory or its subdirectories will be served up by Swift when requested via an HTTP request (for example, from a web browser). |

| Port | The port determines which port the Swift web server listens on. Setting the port to 0 disables the web server and the web APIs. |

| Video Streaming Bitrate | Video streaming will adapt to the network constraints to provide the best experience possible. The bitrate will start at the Starting bitrate (the middle of the three values), and based on the performance of the network, the bitrate may grow up to but no more than the Maximum value, or shrink to no less than the Minimum bitrate. The bitrates are in kilobits/second. |
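The bounded behaviour of the streaming bitrate can be illustrated with a simple clamp. The sketch below is not Swift's adaptation algorithm, which this manual does not describe; the function `next_bitrate`, the fixed step size and the example values are assumptions chosen only to show how the Minimum, Starting and Maximum values relate.

```python
def next_bitrate(
    current_kbps: int,
    minimum_kbps: int,
    maximum_kbps: int,
    network_ok: bool,
    step_kbps: int = 250,  # assumed step size, not a Swift setting
) -> int:
    """Step the bitrate up or down, never leaving [minimum, maximum]."""
    if network_ok:
        proposed = current_kbps + step_kbps
    else:
        proposed = current_kbps - step_kbps
    return max(minimum_kbps, min(maximum_kbps, proposed))


# Example values in kilobits/second: Minimum 500, Starting 2000, Maximum 4000.
bitrate = 2000
for network_ok in (True, True, False):
    bitrate = next_bitrate(bitrate, 500, 4000, network_ok)
print(bitrate)
```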

Cue Device

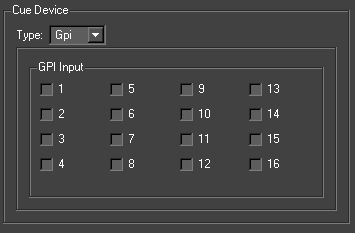

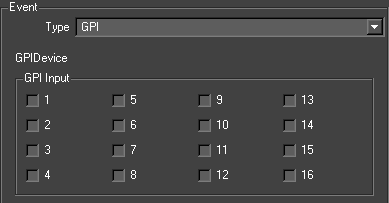

Swift graphics can contain cue points which allow sequences and animations to be paused. Cue devices provide a remote means of triggering cue points, allowing the graphic to continue with the next animation block. Swift supports three device types: GPI, serial and Wii.

GPI

The GPI device consists of either 2 or 16 inputs depending on the device installed (see External Devices in the next section). Select the checkbox to assign which GPI channel triggers the cue.

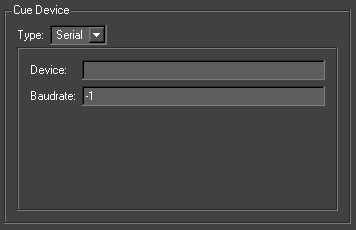

Serial

| Parameters | Description |

| Device | This defines the serial port address. It has the form /dev/ttyS0/1/2… under Linux and com0/1/2 under Windows. |

| Device Baud Rate or Input Number | If the cue device is a serial port address then this will be the baud rate. |

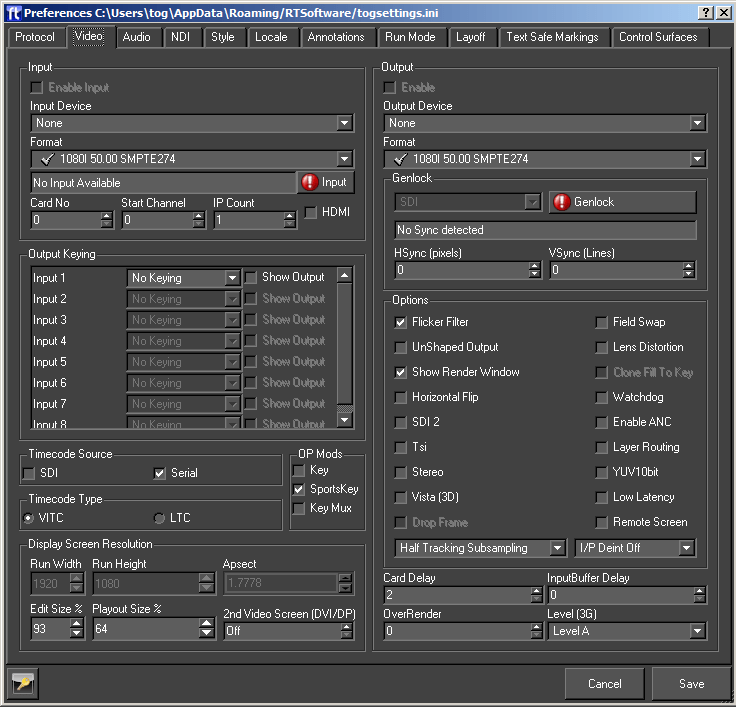

Video Tab

The Video tab allows a user to specify the video devices in use, formats, size, filtering, keying etc for broadcast SD/HD video in and out. Note that depending on what you change, you will have to restart Swift for it to take effect (you will be prompted when this is the case).

Output

Changes in this section require a restart of Swift to take effect.

| Parameter | Description |

| Enable Output | Enable the Video output. |

| Enable Stereo | Enables Stereo Output |

| GPU Direct | |

| Output Device | Select the required output device |

| Format | Select the video output format. |

When a video output device is chosen, certain options will automatically be selected (these are the default values for the device) and some will be greyed out (these are not supported by the device).

Once a device has been selected the output format can be chosen from the drop down list. The formats with a tick next to them are supported by the chosen card and the ones with a red cross are not supported.

Genlock

There is also a drop-down menu called GenLock (generator lock), which can be used to select (if not greyed out) which source will be used for synchronisation. The options are INTERNAL, COMPOSITE and SDI. If you choose COMPOSITE, you MUST have an analog composite (black and burst) signal connected to the video card’s ref-in connector. If you select SDI then Swift will lock to the video input signal. Note that in both cases, if no signal is present then the video will lock to an internally generated clock.
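The fallback rule in the paragraph above can be written out as a small decision function. This is a sketch of the documented behaviour only; the function name `effective_sync_source` and the `reference_present` flag are hypothetical and do not correspond to anything exposed by Swift.

```python
GENLOCK_OPTIONS = {"INTERNAL", "COMPOSITE", "SDI"}


def effective_sync_source(selected: str, reference_present: bool) -> str:
    """Return the clock the video output ends up locked to."""
    if selected not in GENLOCK_OPTIONS:
        raise ValueError(f"unknown GenLock option: {selected!r}")
    # COMPOSITE and SDI both fall back to the internal clock
    # when their reference signal is missing.
    if selected != "INTERNAL" and not reference_present:
        return "INTERNAL"
    return selected


print(effective_sync_source("COMPOSITE", reference_present=False))
```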

Output Options

Each format has a different default setting for the options shown. These will be greyed out unless a custom format is selected, in which case they can be adjusted manually to suit the user.

Under the Control heading there are seven checkboxes. As mentioned above, these are initially set to the default settings of the chosen video device, and some may be greyed out because they are not supported by the device.

The checkboxes which are not greyed out can be selected and unselected as required by the user.

| Parameter | Description |

| Flicker Filter | Applies a field based filter to the video out to reduce the effect of scan line aliasing |

| Unshaped Output | If checked, the fill will reflect the transparent states of graphics on the screen, and this will be reflected in the fill output. If the box is unchecked, Fill will only contain Fill information, and Key will contain all transparency information. The default is shaped output |

| Show Render Window | This will cause the render window to be displayed (or not) during Live mode |

| Horizontal Flip | |

| Field Swap | This will swap the field orientation when running interlaced video. This may be necessary in VR if you are keying over another source and the keyer cannot handle an odd field delay. |

| Lens Distortion | Enables Lens Distortion when Swift is rendering through a VR tracking system. |

| Colour Bars | Displays colour bars through the SDI output. |

| Watchdog | |

| HSync (pixels) | Alter the horizontal genlock synchronization of the SDI video feed. |

| VSync (pixels) | Alter the vertical genlock synchronization of the SDI video feed. |

| Out Delay | Alter the output delay on the video card. |

| OverRender | When rendering with lens distortion, the video output will be distorted causing either a barrel distortion or pincushion distortion effect. Without overrender, gaps appear around the edge of the screen where there are no pixels to fill the distorted area. Overrender fills in the gaps left by this effect. The exact value to use varies based on the amount of lens distortion being applied by the lens that you are using. The value is specified as a percentage of the entire screen size. 10 is a good starting point. |

Input

This sets up the video input for Swift. Usually this does not have to be selected, since the system will automatically select the input based on your output setting. The only exception to this is when you use the nVidia SDI card. In this case you need to set up the video-in card separately.

Enabling the keyer will cause the keyer on board the video card to be enabled. This automatically keys the graphics onto the incoming video input. NOTE: Do not use this keyer if you are reading in and using the internal chroma keyer.

As you choose each device certain options will automatically be selected (these are the default values for the device) and some will be grayed out (these are not supported by the device).

Once a device has been selected the output format can be chosen from the drop down list. The formats with a tick next to them are supported by the chosen card and the ones with a red cross are not supported.

Timecode Source

Choose the timecode source, used with the Sony 9 Pin Plugin.

- SDI – get timecode embedded into the SDI feed.

- Serial – get data embedded into the serial feed.

Timecode Type

Choose the type of timecode to use, when multiple are available.

- VITC – use VITC timecode

- LTC – use LTC timecode.

Output Keying

Choose the keying type used for each input channel.

| Key Type | Description |

| None | Swift does not key the video, only Swift graphics will be output. |

| Chroma | Use the Swift HSV chroma keyer |

| Segment | Use the Swift segment keyer |

| Blue Matte Keyer | For use with Blue screen environments |

| Green Matte Keyer | For use with Green screen environments |

| Red Matte Keyer | For completeness, a red matte keyer is included. |

| Software Linear | Swift graphics linearly keyed over the top of the video input. |

| LAB Keyer | Use the Swift LAB chroma Keyer. LAB is an alternative colour space to HSV. |

| Segment L Lab | Use the segment keyer using LAB rather than RGB colour space. |

Display Screen Resolution

If a video output is selected, this will determine the Run Width and Height. For all cards except Custom these values are fixed. In custom mode, the user can specify the Run Width and Height and aspect ratio.

| Parameter | Description |

| Run Width | The width of the output window |

| Run Height | The height of the output window |

| Aspect Ratio | The ratio of width to height of the run window |

| Edit Width | The width of the edit window, within the scene editor window |

| Edit Height | The height of the edit window, within the scene editor window |

| Playout Size % | This determines the size of the preview screen in Playout mode as a percentage of the render size. If the user is running HD then this should be at least 50% |
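As a worked example of the Playout Size % setting, the sketch below scales a run size by a percentage. The function name `preview_size` is hypothetical, and rounding to whole pixels is an assumption; this manual does not state how Swift rounds.

```python
from typing import Tuple


def preview_size(run_width: int, run_height: int, playout_size_percent: float) -> Tuple[int, int]:
    """Scale the run size by a percentage to get the preview size."""
    scale = playout_size_percent / 100
    # Rounding to whole pixels is an assumption made for this sketch.
    return round(run_width * scale), round(run_height * scale)


# A 1920 x 1080 run size previewed at 50%.
print(preview_size(1920, 1080, 50))
```

For a 1920 x 1080 run size, a setting of 50 gives a 960 x 540 preview.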

Second SDI card

In addition to the options available on the preference panel, you can also configure a second NVIDIA SDI Output board, allowing Swift to output multiple SDI outputs. When used in conjunction with Layer Routing (see layer nodes), you can control which graphics are displayed on each output.

In order to use this configuration, you need a machine with the following cards:

- 2 NVIDIA Quadro 5000 or K5000 cards.

- 2 NVIDIA SDI Output cards compatible with the Quadro cards that you are using.

This configuration generates 2 Fill and 2 Key outputs. The outputs appear as follows on the two cards.

| Card “Fill” Output | |

| First SDI Output Board | SDI 1 Fill |

| Second SDI Output Board | SDI 2 Fill |

To enable the second output board, add –sdi2 to the command line when running up Swift.

Ultimatte Alpha Muxing

The Ultimatte keyer can take 2 Fill/Key inputs. However, the key channels need to be combined and fed into the keyer on the same SDI cable.

To enable this mode of operation, specify –mux in addition to –sdi2.

The following outputs will be generated by Swift.

| Card “Fill” Output | |

| First SDI Output Board | SDI 1 Fill |

| Second SDI Output Board | SDI 1/2 Muxed Key |

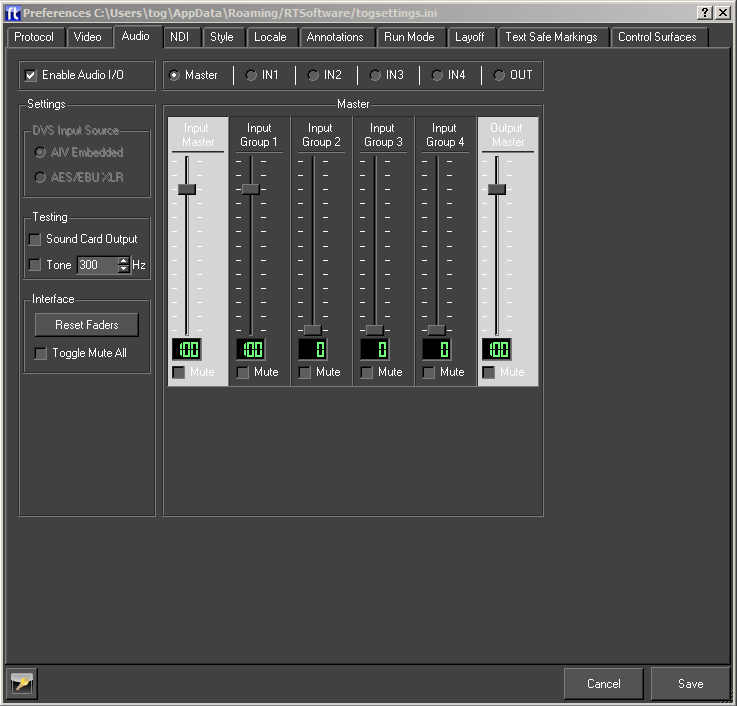

Audio Tab

This option will load the OpenAL sound manager when Swift restarts. With this you can play out .wav files or output sound from mpeg streams. Please ensure no other device is using the sound device when you select this option.

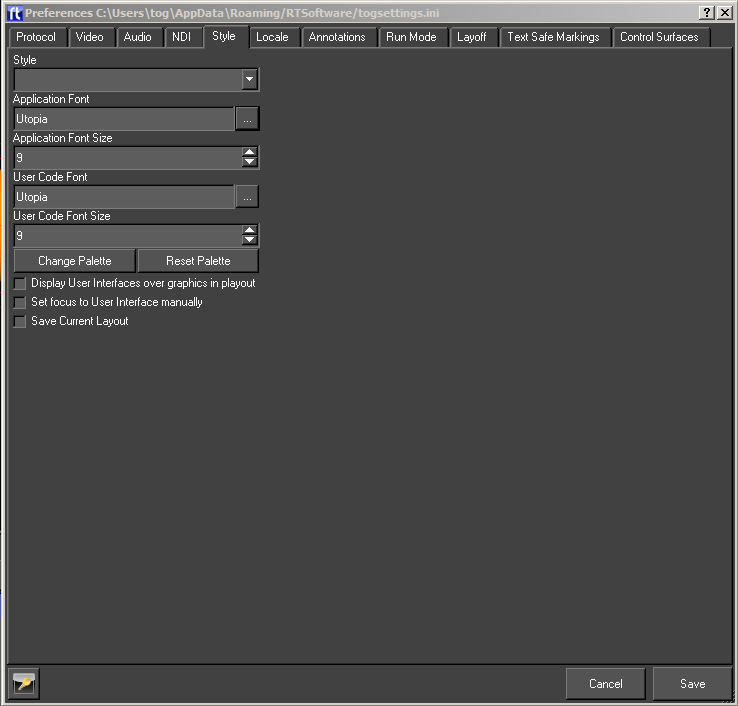

Style Tab

This is used to customise the interface of Swift so it suits the user.

| Parameter | Description |

| Style | These are preset styles which can be selected from the drop down menu and affect the appearance of the interface. |

| Application Font | The font for all text on the interface |

| Application Size | The font size for all text on the interface. |

| User Code Font | The font for the user code editor. |

| User Code Size | The font size for the user code editor. |

| Display User Interfaces over Graphics in Playout | When in Playout mode, user interfaces are displayed over the graphics icon view rather than under the stack. |

| Save Current Layout | This saves the current layout of all the windows within the editor. |

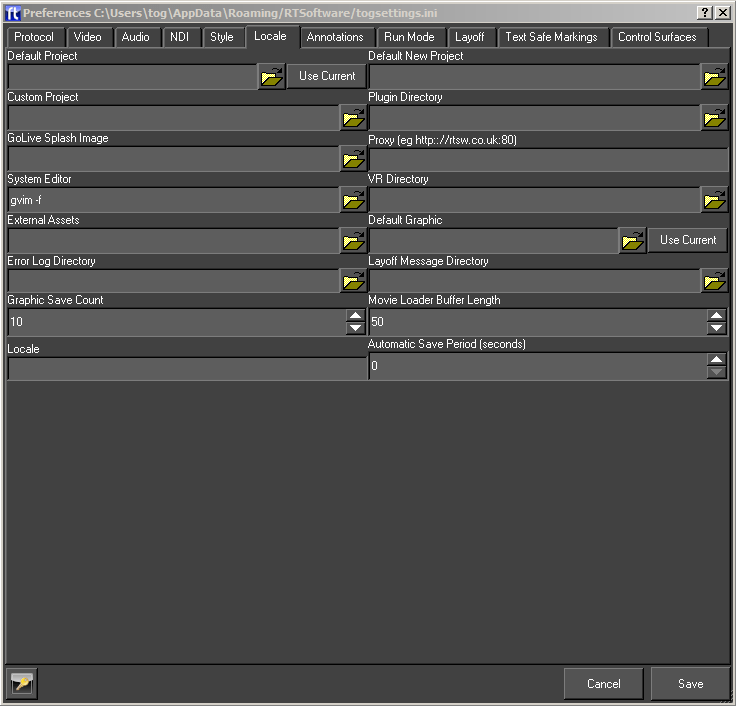

Locale Tab

The locale tab contains settings that relate to how and what Swift loads on startup.

| Option | Description |

| Default Project | If Swift is loaded up and no project is included in the command arguments then the project specified here is loaded. If this is blank then nothing is loaded on startup (unless included in command line arguments). |

| Default New Project | New projects are created in this directory. |

| Custom Project | Allows a custom project to be specified. Custom projects load in parallel with a normal project (called the base project when running with a custom project). Assets in the custom project are used in preference to the same assets in the base project. |

| Plugin Directory | This is the directory which contains any plugins intended to be used across more than one project. Plugins in a project’s Plugins directory can only be used within that project and are unloaded when the project is closed. |

| GoLive Splash Image | The image that will be shown when Swift starts up in Live mode. The splash screen stays covering the output window until a script is loaded. |

| Proxy | If Swift is accessing data across a firewall (e.g. Streaming a movie from the internet into a texture), this is where the proxy is specified to enable http tunneling. |

| System Editor | Whenever a considerable amount of text needs editing (e.g. User code or the SQL statement of a database input), the text can be popped up in this editor. |

| VR Directory | This directory is external to any project and contains the VR setup files. |

| Import Directory | This directory is periodically checked for new files to import into the current project. |

| Default Graphic | This graphic is loaded when Swift is started. |

| External Assets | This directory contains external assets that will be loaded when a project is loaded. |

| Automatic Save Period | If this is non-zero, Swift saves the current project, graphic and shaders periodically at this interval. |

| Graphic Save Count | The maximum number of graphic backups maintained by Swift. |

| Layoff Message Directory | MOS messages are gathered into sequences starting with commands to play methods whose name ends with On and ending with commands to play methods whose name ends with Off. These sequences are then saved to this directory. These messages are used to automatically layoff graphics to disk. |

| Locale | Specifies the Locale i.e. the geographic location. This is used to determine local differences to time and language. |
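The grouping rule described for the Layoff Message Directory can be sketched as follows. This works on method names only and is a guess at the general shape of the logic, not Swift's implementation: the function `group_layoff_sequences` is hypothetical, and how Swift treats commands that fall outside a sequence, or a sequence that never ends, is not documented here.

```python
from typing import List, Optional


def group_layoff_sequences(method_names: List[str]) -> List[List[str]]:
    """Group method names into runs that start with *On and end with *Off."""
    sequences: List[List[str]] = []
    current: Optional[List[str]] = None
    for name in method_names:
        if current is None:
            # Commands outside a sequence are ignored in this sketch.
            if name.endswith("On"):
                current = [name]
        else:
            current.append(name)
            if name.endswith("Off"):
                sequences.append(current)
                current = None
    # An unfinished sequence at the end is dropped in this sketch.
    return sequences


played = ["bringOn", "update", "takeOff", "tick", "bringOn", "takeOff"]
print(group_layoff_sequences(played))
```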

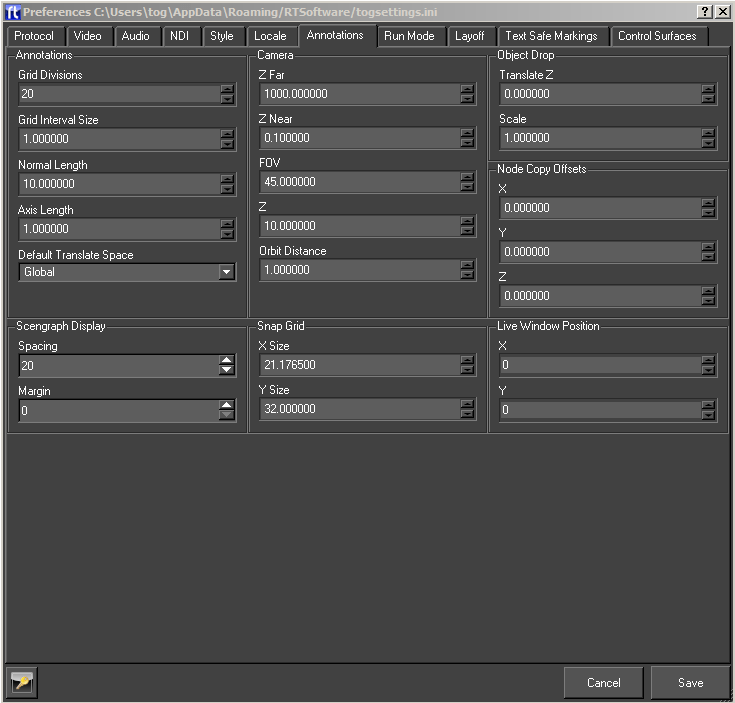

Annotations Tab

Annotations are graphical elements that appear on screen whilst editing to provide information or control handles for manipulation.

This tab also contains options that control how new cameras and objects are added to the scene by default.

| Option | Description |

| Axis Length | The length of the axis in the window |

| Grid Interval Size | Distance between each grid line |

| Grid Divisions | Number of divisions in the grid |

| Normal Length | The length of normals when turned on in edit mode. |

| Default Translate Space | |

| Camera Z Near | Sets the default near clip plane of the camera. |

| Camera Z Far | Sets the default far clip plane for the camera. |

| Camera FOV | Use this to set the default field of view for any camera which is dropped into the scene. |

| Camera Z | The default Z world coordinate of cameras which are dropped into the scene. |

| Camera Orbit Distance | The distance along the viewpoint of the global camera about which the global camera revolves when orbiting |

| Scenegraph Margins | The margins on the scenegraph editor around the scenegraph tree |

| Scenegraph Spacing | The distance in pixels between each item in the scenegraph editor. |

| Object Translate Z | The default Z world coordinate of objects (not cameras, see Camera Z) which are dropped in the scene. The object's X and Y coordinates come from the mouse position at the time it is dropped. |

| Object Scale | Used to initialise the scale on Transform nodes when objects are created. |

| Snap Grid X Size | The x interval in the snap to grid. |

| Snap Grid Y Size | The y interval in the snap to grid |

| Live Window Position X | The x position of the render window when Swift is run in Live mode. |
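As a rough illustration of what the Snap Grid X Size and Snap Grid Y Size intervals mean, the Python sketch below snaps a point to the nearest grid intersection. Rounding to the nearest multiple is an assumption made for this example; the manual does not state how Swift rounds, and `snap_to_grid` is a hypothetical name.

```python
def snap_to_grid(x, y, size_x, size_y):
    """Snap (x, y) to the nearest multiple of the grid intervals.

    Assumption: snapping rounds to the nearest grid line on each axis.
    """
    if size_x <= 0 or size_y <= 0:
        raise ValueError("grid intervals must be positive")
    return (round(x / size_x) * size_x, round(y / size_y) * size_y)


# A point at (12, 27) on a 10 x 5 grid.
print(snap_to_grid(12, 27, 10, 5))
```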

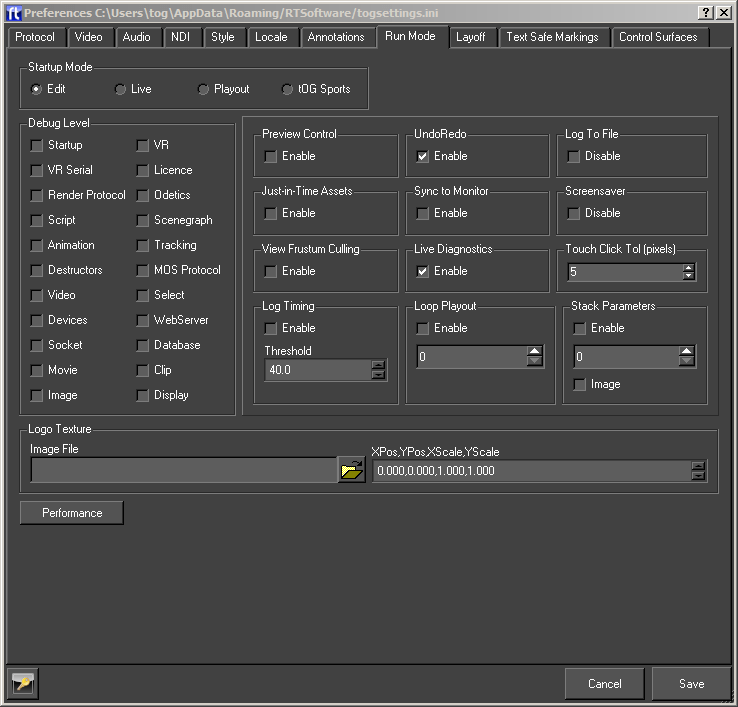

Run Mode Tab

This tab lets you set the mode that Swift starts in, some run time options, and allows you to turn various debug options on and off.

Startup Mode

This sets the mode Swift will be in when next started up.

| Run Mode | Description |

| Edit | The Swift CG+ interface |

| Playout | The playout interface |

| Live | A “black box” render window with no GUI, suitable for remote control |

| Sports | Swift Sports |

Debug Level

Swift can give very detailed output about several aspects of its operation. You may be asked to turn these on by support when fault checking.

NOTE: Debug levels should not be left checked on a live machine unless you are explicitly told to by RTSoftware support, as doing so can cause performance issues.

However, the following debug levels can be useful while building and fault checking:

| Debug Level | Description |

| MOS Protocol | Whenever you use MOS, the messages sent and received are printed to the output. |

| Timing | This turns on a screen display showing the timings of various parts of the Swift render. You can use this to determine if you are running at frame rate. |

| Database | Logs all database queries that are made by Swift. This can be useful when fault checking why a database query is not working correctly, as you can see the query after all value interpolation has been applied. |

| VR | Logs the computed camera position that Swift is using when working with a VR system. This is useful for checking that the values are what you expect them to be. |

| VR Serial | Logs the raw serial data that comes from a VR system. This is represented in hexadecimal. If you understand the raw serial data feed, this can be used to check that the data being received from the VR system is correct. |

| WebServer | Logs connections and requests to and from the built in web server. This is useful when building and fault checking web applications on top of Swift |

Preview Control

Swift preferences can be saved into two files: the default file if this option is not selected, and a preview file if it is selected. This allows two configurations on the same machine. The preview configuration can be designed to reduce the render time for each frame (e.g. by selecting a small render window size). As well as running a Swift in Playout mode, a Swift in Preview mode can then be run at the same time on the same machine. When Swift is run in Preview mode, only certain frames are displayed (e.g. at the end of blocks), which gives a preview of graphics to come. The two Swifts communicate, and the Preview Swift is driven by the Playout one. This allows an operator to see which graphic is next on line.

Undo/Redo

Turns the undo/redo manager on and off.

Loop Playout

To play through a stack of graphics in Playout mode, the user must press Take continually. If this is selected, Swift automatically plays each graphic in turn, pausing for Delay seconds between each one and restarting when the end of the stack is reached.
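The looping behaviour can be pictured with the short Python sketch below. It is not Swift code: `loop_playout`, the `take` callback and the `max_takes` limit (added so the example terminates) are all assumptions made for illustration.

```python
import itertools
import time


def loop_playout(stack, delay_seconds, take, max_takes=None, sleep=time.sleep):
    """Take each graphic in turn, pause, and restart at the end of the stack.

    Hypothetical sketch: 'take' stands in for pressing Take on a graphic.
    With max_takes=None the loop runs until interrupted.
    """
    if not stack:
        return 0
    count = 0
    # itertools.cycle restarts from the first graphic after the last one.
    for graphic in itertools.cycle(stack):
        if max_takes is not None and count >= max_takes:
            break
        take(graphic)
        count += 1
        sleep(delay_seconds)  # the Delay pause between graphics
    return count


played = []
loop_playout(["strap", "clock"], 0, played.append, max_takes=5)
print(played)
```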

Just-in-Time Assets

Assets (e.g. shaders, fonts) are loaded in two stages. Usually all the assets are read off disk and then converted to a form suitable for use with graphics cards. If this option is selected, only the first stage is done when the project is loaded. The second stage is only done when the asset is needed. Fonts should be saved out as individual meshes; this is an option in the Text Node editor.
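The two-stage idea can be sketched in Python as a lazily converted asset. The class and its two callbacks are hypothetical and only model the behaviour described above: stage one (reading from disk) always happens at load, while stage two (conversion for the graphics card) is deferred until first use when just-in-time loading is on.

```python
class LazyAsset:
    """Two-stage asset loading sketch (hypothetical class, not a Swift API)."""

    def __init__(self, name, read_from_disk, convert_for_gpu, just_in_time=False):
        self.name = name
        self._convert = convert_for_gpu
        # Stage 1: always performed when the project is loaded.
        self._raw = read_from_disk(name)
        self._converted = None
        self._is_converted = False
        if not just_in_time:
            # Without just-in-time loading, stage 2 also runs at load.
            self._ensure_converted()

    def _ensure_converted(self):
        # Stage 2: runs at most once per asset.
        if not self._is_converted:
            self._converted = self._convert(self._raw)
            self._is_converted = True

    def get(self):
        """Return the converted asset, converting it on first use if needed."""
        self._ensure_converted()
        return self._converted
```

The trade-off in this model is that project load is quicker, but the first use of each asset pays the conversion cost.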

Log To File

By default, Swift logs diagnostic information to a file, as well as printing it to the command window. This option allows you to disable the writing of log files.

Only check this if you are told to by RT Software support, as without log files, any issues with your system cannot be diagnosed as quickly.

Log files are written to the following places:

| Operating System | Log Directory |

| Windows | C:/Program Files/Swift/log |

| Linux | /var/log/Swift |

Live Diagnostics

Enabling live diagnostics adds some additional diagnostic information to the live mode of Swift. Rather than just being a render window, additional information is provided to show the running state of the machine.

View Frustum Culling

Enable view frustum culling of the scenegraph. If this is not checked, then view frustum culling will not be applied, even if it is checked on individual nodes in the scenegraph.

ScreenSaver

Allows Swift to disable the screensaver on the operating system.

Touch

This setting affects how Swift processes gestures that come from a touch screen.

| Parameter | Description |

| Click Tolerance (pixels) | Changes how far a touch has to move (in pixels) before it is recognised as a drag gesture rather than a click gesture. For small touch screens this value can normally be left small. |
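A minimal Python sketch of how a click tolerance separates clicks from drags is shown below. The straight-line distance and the strict "greater than" comparison are assumptions for this example; the manual does not specify how Swift measures the movement.

```python
import math


def classify_touch(start, end, click_tolerance_px):
    """Classify a touch as 'click' or 'drag' from its start and end points.

    Assumption: movement is the straight-line distance in pixels, and a
    touch becomes a drag only when it moves further than the tolerance.
    """
    distance = math.hypot(end[0] - start[0], end[1] - start[1])
    return "drag" if distance > click_tolerance_px else "click"


print(classify_touch((100, 100), (103, 104), 5))  # moved 5 pixels
print(classify_touch((100, 100), (106, 108), 5))  # moved 10 pixels
```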

Stack Parameters

In playout, the stack can display parameters next to the graphic or method.

| Parameter | Description |

| Enabled | Check this to make parameters visible in the playout stack |

| Num Parameters | Set the maximum number of parameters that will be displayed next to each graphic or method in the stack. |

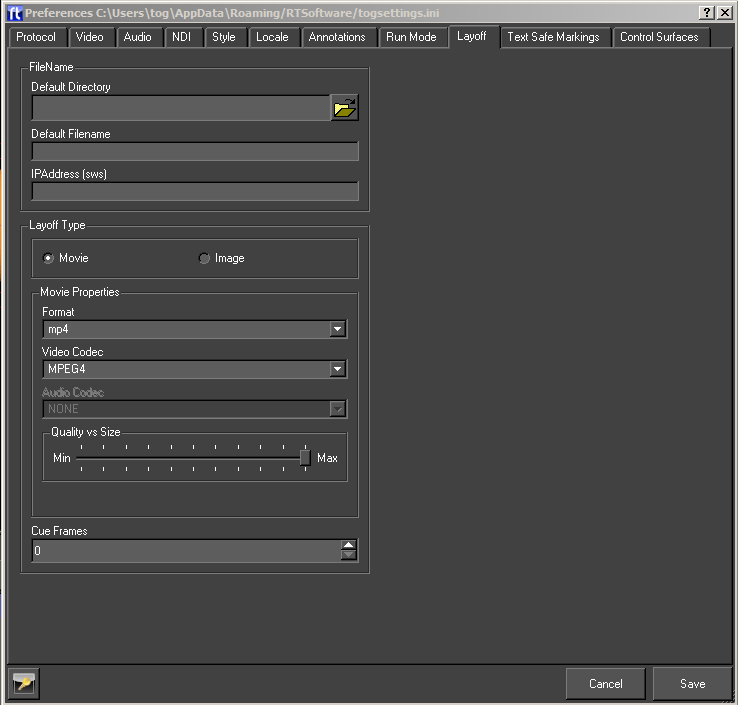

Layoff Tab

The user can specify here how Swift will lay off graphics to disk. See the playout documentation for details.

NOTE: Files can be laid off in Swift CG+ using the timeline editor layoff method tool.

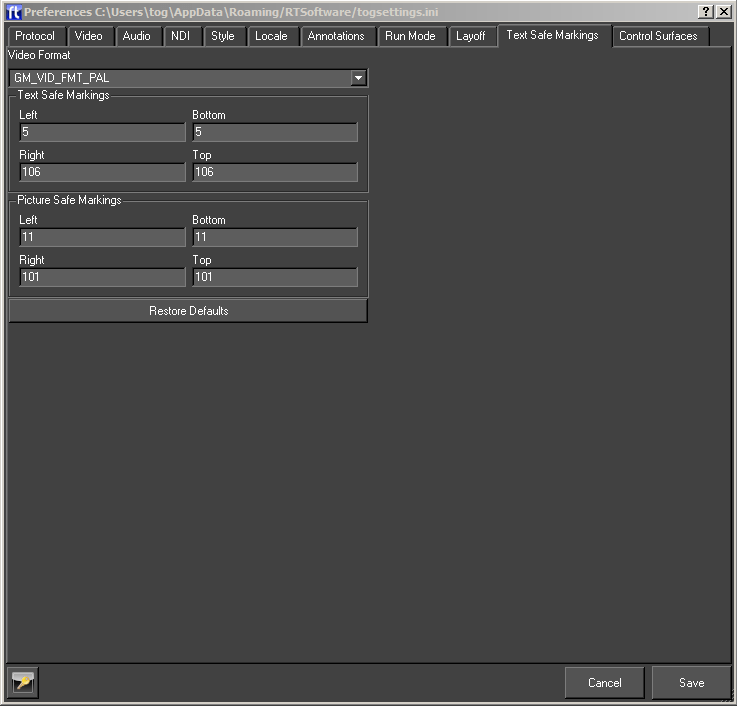

Text Safe Markings

Swift displays the text safe area on the screen when in Edit mode. These text safe areas are set up here. Swift has its own values for safe areas for various formats, but these areas can vary from one geographic area to another and from broadcaster to broadcaster.

| Option | Description |

| Video Format | The format for which to specify the safe areas. |

| Text Safe Area | The rectangle within which text will not be clipped off for the given video format. |

| Picture Safe Area | The rectangle within which picture will not be clipped off for the given video format. |

| Restore Defaults | Reset the safe areas to Swift’s own values. |
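To make the idea of a safe-area rectangle concrete, the Python sketch below computes a centred rectangle that covers a given fraction of the frame. The 90% figure in the usage line is purely illustrative; it is not one of Swift's built-in values, and `centred_safe_area` is a hypothetical helper.

```python
def centred_safe_area(width, height, fraction):
    """Return (x, y, w, h) of a centred rectangle covering 'fraction'
    of the frame in each dimension (illustrative only)."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in the range (0, 1]")
    w = round(width * fraction)
    h = round(height * fraction)
    # Centre the rectangle by splitting the remaining margin evenly.
    return ((width - w) // 2, (height - h) // 2, w, h)


# Illustrative 90% area on a 1920 x 1080 frame.
print(centred_safe_area(1920, 1080, 0.9))
```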

Graphic Menu

Overview

A full description of Swift Graphics and how they are stored can be found in the chapter User APIs.

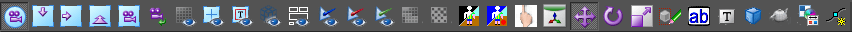

The graphic options are accessible via the Graphic menu or via this toolbar:

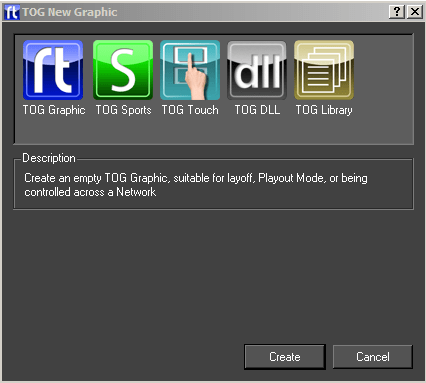

New

Create a new graphic, library object or Swift sports graphic within the currently loaded project.

Double click on the required item.

Open

The Open dialog presents a list of graphics in the current project. If an icon has been generated for the graphic it will be displayed, otherwise the Swift generic graphic icon will be displayed.

Double click on the icon or select the icon and click on Load Graphic to open the graphic.

Save

Save the currently open graphic.

Save As

Save the currently open graphic under a new filename.

Edit

Opens the Swift CG+ Editor. This allows for certain graphic-specific properties to be changed.

Includes

The user can manage the ruby modules used by the graphic. Ruby modules extend the functionality of the language.

Custom Transfer Method Name

Not available.

Reorder Object Nodes

Reorder the object nodes in a graphic after transfer to match the order in the graphic.

Swift Sports

These only apply to Swift Sports graphics – refer to the Swift Sports Manual.

Recently Opened

Display a list of recently opened graphics. Selecting an item from the list has the same effect as browsing for and opening a graphic via the Graphic -> Open menu option.

Reload

Reload the current graphic from disk. All changes to the graphic will be lost. Changes to assets will be unaffected as they are saved independently from the graphic.

Load last undo

Loads the last undo from disk. This is used in the unlikely event of Swift crashing; the user can then restore the graphic to its state before the crash.

Generate interface

Creates an interface that can be used to specify all the graphic inputs.

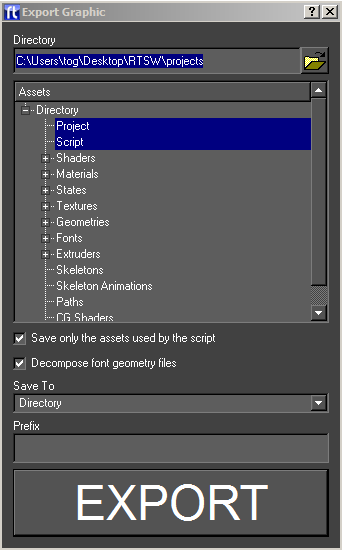

Export

Exports a graphic and all the assets used in it to the specified directory.

Directory

The assets and script will be saved to this directory.

Assets

The types of assets to export. If Project is selected, a project file will be created in the export directory which references that directory. This effectively exports the graphic into its own project. This will also cause all assets to be renamed (by prefixing their names with the export directory), making them unique, so that this script and its assets can be imported safely into another project.

Options

Further options:

- Zip – create a zip file from the export directory.

- In Use – only export assets that are used by the script otherwise export ALL assets.
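Outside Swift, the effect of the Zip option can be approximated with a few lines of Python. This sketch only zips an existing export directory using the standard library; `zip_export_directory` is a hypothetical helper, and the archive layout Swift itself produces is not documented here.

```python
import shutil
from pathlib import Path


def zip_export_directory(export_dir):
    """Create <export_dir>.zip next to the export directory and return its path."""
    export_dir = Path(export_dir)
    if not export_dir.is_dir():
        raise NotADirectoryError(str(export_dir))
    # make_archive appends ".zip" to the base name itself; the archive is
    # written beside the directory, so it never ends up inside its own contents.
    archive = shutil.make_archive(str(export_dir), "zip", root_dir=str(export_dir))
    return Path(archive)
```

For example, `zip_export_directory("exports/strap")` would write `exports/strap.zip`, assuming that directory exists.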

Revert

Each time Swift saves a graphic it also saves a version of the graphic to the backup graphic directory (GMGraphicBackups inside the project directory) with a timestamp added to the filename. Choosing the Revert option will cause Swift to load the previously saved version of the graphic and overwrite the current graphic with the previous version.
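The relationship between timestamped backups, a maximum backup count and reverting can be sketched as below. The record format (a timestamp paired with a file name), the file names and both function names are assumptions for illustration; the actual naming scheme used inside GMGraphicBackups is not specified in this manual.

```python
from datetime import datetime


def prune_backups(backups, max_count):
    """Split (saved_at, filename) records into (kept, removed), newest first.

    Assumption: only the newest max_count backups are retained.
    """
    if max_count < 0:
        raise ValueError("max_count must not be negative")
    ordered = sorted(backups, key=lambda b: b[0], reverse=True)
    return ordered[:max_count], ordered[max_count:]


def backup_to_revert_to(backups):
    """Return the most recently saved backup record, or None if there is none."""
    return max(backups, key=lambda b: b[0]) if backups else None


# Hypothetical backup records, for illustration only.
backups = [
    (datetime(2024, 1, 1, 9, 0), "strap_0900.rb"),
    (datetime(2024, 1, 1, 9, 30), "strap_0930.rb"),
    (datetime(2024, 1, 1, 10, 0), "strap_1000.rb"),
]
kept, removed = prune_backups(backups, 2)
print([name for _, name in kept], [name for _, name in removed])
```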

Close

Close the currently open graphic.

Tools Menu

Overview

Tools provides a number of operations that do not fit under other categories. Tools are an important part of an efficient workflow inside of Swift.

The tools options in Swift are accessible via the Tools menu or via the toolbar.

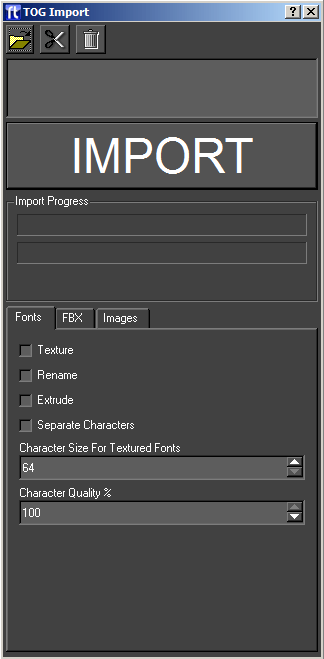

Import

The import options are accessed from the Tools menu. Import is used to import external assets into Swift.

The user selects a list of files using the file chooser. Files can be removed and the list cleared. The user then just presses the import button to import all the files.

| Option | Description |

| Add | Opens a file browser to add new entries to the import list |

| Cut | Removes the selected entries from the import list |

| Clear | Clears the import list |

| Import List | A list of all of the files that will be imported. |

| IMPORT | Begin Importing the files in the import list |

| Import Progress | Shows progress of the import operation. Some import operations can take several minutes. |

| Fonts | Font specific parameters |

| FBX | FBX specific parameters. |

| Images | Image specific parameters. |

Importable Assets

The following assets can be imported into Swift

| Asset type | Description |

| Swift assets (.geo, .sha, .mat, .sta, .txt, .pth, .lns, .fnt, .dso) | Existing Swift-created assets, normally exported from an existing Swift project. Importing a file from a different project will copy it to the correct place in the project. Note: In order to import a shader correctly, the user should make sure that the material, textures and state that the shader uses are imported first; otherwise they will not be available in the project and the shader will be incomplete. |

| Maya .OBJ files | Maya .OBJ files can be generated in Maya and several other 3D modelers. They contain mesh data, but do not support shaders, materials or textures. Maya .OBJ files have no special options for loading; when imported they are converted into Geometries and copied into the Geometry directory. Recommendation: Use FBX files instead wherever possible. |

| Fonts (.ttf) | Swift can import true type fonts. When imported the fonts are converted into Swift’s internal format, for speed of loading and rendering. |

| Images (.jpg, .png, .tif, .tga, etc) | Swift can import a wide range of image formats. |

| Movies (.mov, .avi, .mp4, .flv, etc) | Swift can import a wide range of movie formats. Note: Some movie codecs are better for use in Swift than others. |

| User Interface files (.ui) | These files are copied into the GMScript/Template directory of the project. They are created using the Qt3 Designer application. |

| Swift Scripts (.rb) | This is the Swift Graphic itself – it is copied into the GMScript/Template directory. |

| CGFX Shader Programs (.CGFX) | CG Shaders allow complex graphics effects to be used in a graphic. They are copied to the appropriate directory. |

| HDR files | These files are High-dynamic-range images that are used by some CGFX shaders. They are copied to the appropriate directory. |
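The note about importing a shader's material, textures and state first can be turned into a simple ordering rule for an import list. The Python sketch below moves .sha files to the end while keeping everything else in its original order. It assumes that files are processed in list order, which this manual does not confirm, and `order_for_import` is a hypothetical helper.

```python
from pathlib import PurePath


def order_for_import(paths):
    """Return the paths with shader files (.sha) moved to the end.

    sorted() is stable, so the relative order of the other files and of
    the shaders themselves is preserved.
    """
    return sorted(paths, key=lambda p: PurePath(p).suffix.lower() == ".sha")


files = ["glow.sha", "glow.mat", "logo.png", "glow.sta"]
print(order_for_import(files))
```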

Importing ZIP files

If you add a zip file to the import dialog, the entire contents of the zip file will be selected to be imported. This is very useful in combination with the Zip option of Graphic -> Export to quickly export a graphic and all its assets from one project and import it into another.

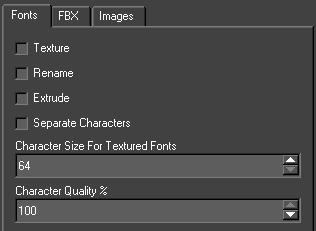

Importing Fonts

Fonts can be imported as Geometric Text, or Textured Text. Geometric text models each character of the text as a 3D model, which produces very smooth anti-aliased text on output that can be scaled to any size without quality loss. Textured text displays text as a series of images. This has the advantage that the images can be manipulated in an image manipulation program such as Photoshop to create effects that could not be achieved with simple text. In most cases geometric text is used.

The import dialog has a font specific tab, that contains options for importing fonts.

Texture

Import as textured text, rather than geometric text.

Rename

If checked, allows the user to rename a font to something different from what the TrueType font suggests that it is.

Overwrite

Overwrite fonts already imported into Swift without asking for confirmation on each font. This is useful if the user is importing a number of fonts and wants to overwrite existing fonts automatically without being prompted each time. For general usage, leave the Texture and Extrude checkboxes unchecked.

Extrude

For geometric text, selecting this will cause the characters to be extruded into 3D.

Importing FBX

Fbx is a file interchange format used for importing and exporting 3D models, shaders and animations from various 3D animation packages. Fbx is supported by all the major 3d software developers including:

- Autodesk Maya

- Autodesk 3DS Max

- Cinema 4D

- Softimage

- Newtek Lightwave 3D

Make sure that the latest version of the Fbx plugin is installed in the 3D animation software. For a more detailed description of FBX workflow usage for Swift, refer to the training and tutorials manuals.

Swift supports the following FBX features for importing geometry.

- 3D Meshes

- Nurbs (There is no control over the import; they are converted into 3D Meshes as they enter Swift)

- Material colours and Textures:

  - Textures only in the diffuse channel

  - Multi sub object materials are supported

  - Recursive materials are not supported (multi materials within multi materials)

- Cameras

  - Cameras can be targeted or free

- Lights

  - Omni lights

  - Spot Lights

  - Directional Lights

- Animations:

  - On the mesh, lights and camera

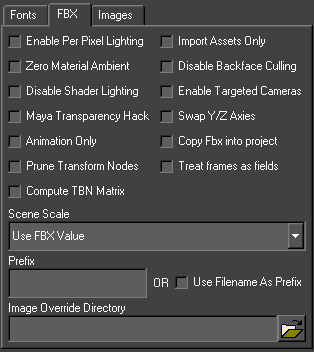

FBX Import Options

To import an Fbx file, create or load a graphic, and then go to the import dialog and add the fbx file to the list of files to import.

| Option | Description |

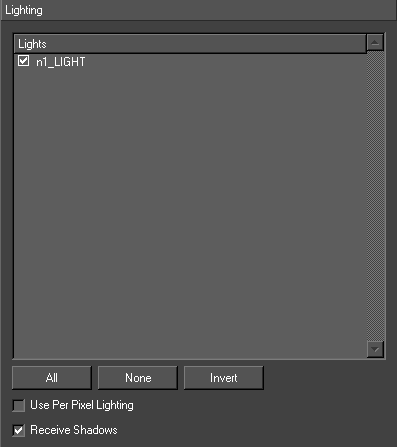

| Enable Per Pixel Lighting | Enables per pixel lighting on imported geometry as default. |

| Zero Material Ambient | Some 3D modelers such as 3D Studio Max use diffuse and ambient in a way that causes objects to import into Swift with too much ambient lighting, causing the objects to look bright and flat. Checking this checkbox will cause Swift to zero the ambient levels in all imported materials, improving the overall lighting of the scene in most cases. |

| Disable Shader Lighting | Instructs the FBX importer to ignore lighting on import, and to disable lighting on all generated shaders. This is useful if the model that is being imported has lighting baked into the textures. |

| Maya Transparency Hack | Maya reports a transparency colour that Swift converts to a grayscale for use as a transparency scale. |

| Animation only | Overwrites all animation channels |

| Import Assets Only | If this option is selected, Geometries, Materials, Textures, Shaders and States will be imported from the FBX file, but the Scenegraph structure will not be recreated. |

| Disable Back Face Culling | By default, Swift back-face culls (does not draw faces turned away from the camera) all objects for performance reasons. Unfortunately, this distinction is not always as easily recognizable inside 3D design packages, so objects can arrive with faces the wrong way round, causing holes to appear. Enabling this option will turn off back face culling. WARNING: Turning off back face culling should only be done as a last resort, as it can impact the time it takes for the scene to render. Whenever possible, let the artist know that faces are the wrong way round so that the problem can be fixed in the original 3D model. |

| Enable Targeted Cameras | Enables the targeting of imported cameras via linking a null node within the scenegraph. |

| Swap Y/Z Axis | By default, some 3D packages will export FBX files with the Y/Z axes swapped compared to how Swift uses them. This will instruct the FBX importer to attempt to swap the axes back around. Note that this may not work as desired, especially if there are complex rotate animations in effect. If in doubt, leave unchecked and fix the rotation issue by either rotating the model in the 3D application, or by applying an additional transform inside of Swift. |

| Scene Scale | Allows the user to input a scale value for imported objects, or reset generic units to cm, m, ft and ins etc. |

| Prefix | A common problem when importing multiple 3D objects into the same project is the issue of dealing with materials and objects with conflicting names. This option prefixes a string to the beginning of all material, geometry, shader and texture names to guarantee that they are unique. For example, if the prefix string is set to ‘car_’ and a material is called ‘default01’, the resultant material will be saved out as ‘car_default01’. |

| Image Override Directory | Some FBX files do not have the textures embedded into the file; instead the textures are provided separately in an images directory. Set this option to the directory that contains the relevant textures. |
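The effect of the Prefix option on asset names can be sketched in Python as below. The asset names, the prefixes and `apply_prefix` are illustrative assumptions; the sketch only shows why a per-import prefix avoids name clashes between two models that both use a generic name.

```python
def apply_prefix(prefix, asset_names):
    """Map each original asset name to its prefixed name."""
    return {name: prefix + name for name in asset_names}


# Two imports that both contain a material with the same generic name.
stadium = apply_prefix("stadium_", ["default01", "grass"])
trophy = apply_prefix("trophy_", ["default01", "gold"])

# After prefixing, no name is shared between the two imports.
clashes = set(stadium.values()) & set(trophy.values())
print(stadium["default01"], trophy["default01"], clashes)
```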

Importing Images

Swift supports a large number of image types, including .jpg, .gif, .tga, .tif, .png, .bmp, and .sgi.

We recommend .png files for images, because

- all major image manipulation programs support it.

- images are defined as 24 bit and can have an 8 bit alpha channel

- images are saved with lossless compression, so the image remains intact.

- they are, in our experience, the least problematic image format to use.

Importing an image into a project in Swift automatically copies it to the correct part of the project directory structure.

Swift has support for various video formats. Swift uses ffmpeg to read these formats, and supports most of the formats that ffmpeg supports.

For broadcast use, we recommend that MPEG 4 encoded files with a bit rate of at least 5000Kbit/s are used.

When movies are imported into Swift, they are copied into the ~GMData/Images directory associated with that project.

| Option | Description |

| Create Shader for each Image or TMV | Automatically creates a shader for each imported image or sequence |

| Shader Prefix | Adds a prefix to the name of each created shader for easy scenegraph management |

| Create TMV | Creates a TMV file from an image sequence |

TMV files

TMV files are Swift Movie Files. Typically they are generated from image sequences that often contain alpha channel information. By selecting all of the images in a sequence, Swift then creates a .tmv file that it places inside the ~GMData/Images directory. This file can be utilised in just the same way as any image or movie file for use as a texture or background.

The import dialog has an image specific tab, that contains options for importing images.

Maximum Image Sizes

Graphics hardware limits the size of images that can be drawn; the current limit is 4096×4096 per texture. If an image is larger than this in width or height, break it down into smaller images.
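One way to plan how an oversized image is broken down is to compute the tile rectangles first. The Python sketch below does only that arithmetic; it does not read or write image files, and `tile_rectangles` is a hypothetical helper rather than a Swift feature.

```python
def tile_rectangles(width, height, max_size=4096):
    """Return (left, top, tile_width, tile_height) tiles that cover the
    image, with no tile larger than max_size in either dimension."""
    if width <= 0 or height <= 0 or max_size <= 0:
        raise ValueError("dimensions must be positive")
    tiles = []
    for top in range(0, height, max_size):
        for left in range(0, width, max_size):
            tiles.append((left, top,
                          min(max_size, width - left),
                          min(max_size, height - top)))
    return tiles


# A 5000 x 3000 image needs two tiles side by side.
print(tile_rectangles(5000, 3000))
```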

Creating Optimal Image Sequences

There are four ways to have animated textures in Swift. Firstly, there are streaming movie formats, such as MPEG. These are the preferred format for long animated sequences with no alpha channel.

There are several movie formats that support an alpha. The simplest is uncompressed mpeg. There are also very specific codecs like the Avid Meridien Codec which also support alpha. Please see the ffmpeg online documentation for codecs supported by Swift.

The user can also have a texture accept an input video from the video card as source for the texture.

Swift can also have a texture accept input from the result of a dynamic texture node.

For short animations, especially when transparency is involved or where non-linear animations are performed to the timing (for example, reversing the animation), the user can use an animated sequence of images. However, loading these into Swift is slow. In order to speed the loading time up, the user can convert image sequences into a TMV.

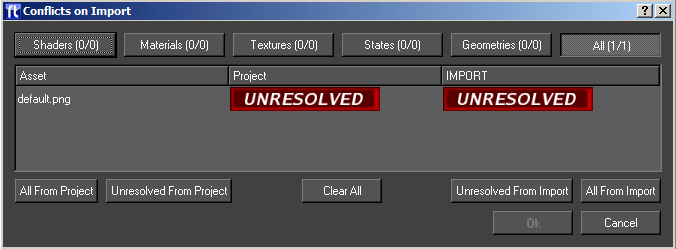

Import Conflict Resolution

When importing assets, a newly imported asset may conflict with an existing asset, for example when two assets share the same name.

The conflict resolution dialog allows these conflicts to be resolved.

The conflict resolution dialog shows the assets in conflict, and allows the user to individually select which assets should be imported from the FBX file, and which should be kept from the project.

Along the top of the dialog, there are buttons that filter on the various asset types. Clicking the All button displays all conflicts.

Next to each button, there is a count (for example, 5/10) which shows the number of unresolved conflicts, compared to the total conflicts in that group. In order to continue, all conflicts need to be resolved.

The main window shows each conflict in the current filter group, and shows whether they are unresolved, or resolved in favour of the FBX version, or the Project version of the asset.

Finally, there are several buttons for mass manipulation of the resolved states.

| Option | Description |

| All To Project | Resolves all assets in the given group in favour of the assets already in the project. |

| Unresolved To Project | Resolves all assets in the given group that have not already been resolved to the project. |

| Clear All | Cancels any resolutions that have been made in the current group, returning everything to being unresolved. |

| Unresolved to Fbx | Resolves all assets in the given group that have not already been resolved to the Fbx assets |

| All To Fbx | Resolves all assets in the given group in favour of the assets in the Fbx file. |

| Ok | Accepts the current resolutions, and proceeds with the import. The user is only allowed to do this once all conflicts are resolved. |

| Cancel | Cancels the import process of the FBX file; if cancelled now, the import will not happen. |
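The behaviour of the counts and the bulk buttons can be modelled with a small Python sketch. The data model (a dictionary from asset name to None, "project" or "fbx") and all function names are assumptions made for illustration; they do not describe Swift's internals.

```python
PROJECT, FBX = "project", "fbx"


def count_label(resolutions):
    """Return 'unresolved/total', like the count shown next to each filter."""
    unresolved = sum(1 for side in resolutions.values() if side is None)
    return f"{unresolved}/{len(resolutions)}"


def all_to(resolutions, side):
    """All To Project / All To Fbx: resolve every conflict to one side."""
    return {name: side for name in resolutions}


def unresolved_to(resolutions, side):
    """Unresolved To ...: resolve only conflicts that are still unresolved."""
    return {name: (side if current is None else current)
            for name, current in resolutions.items()}


def clear_all(resolutions):
    """Clear All: return every conflict to the unresolved state."""
    return {name: None for name in resolutions}


def can_proceed(resolutions):
    """Ok is only allowed once every conflict has been resolved."""
    return all(side is not None for side in resolutions.values())


conflicts = {"default01": None, "logo": FBX, "grass": None}
print(count_label(conflicts), can_proceed(conflicts))
conflicts = unresolved_to(conflicts, PROJECT)
print(count_label(conflicts), can_proceed(conflicts))
```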

Screen Grab

The Screen Grab option will save the current frame in the editor graphics window out to disk. A file save dialog pops up to allow choosing of a file to write the image to. When Save is pressed the image will be saved out.

Generate Icon

Generates an icon for the currently loaded graphic. The icon is based on what is currently visible in the scene.

Reload Interfaces

This menu option reloads any custom user interfaces defined in the project. User interfaces are created externally to Swift and if they are edited while Swift is running they need to be reloaded.

User interfaces are created using the external program Qt3 Designer.

Data View

This interface allows the user to manage the external data being fed to Swift

The user can set up this data and then run the graphic to check the correctness of the graphics inputs.

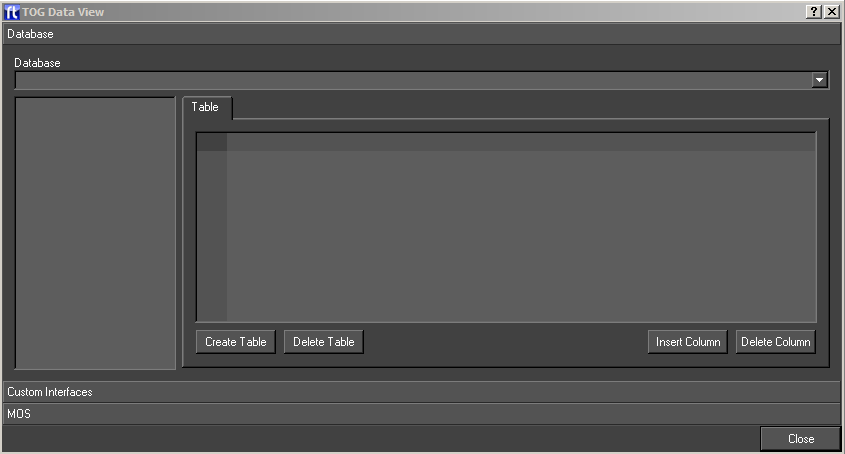

Database

Lets you browse the database connected to the Swift machine.

| Option | Description |

| Database | A list of the databases in the current project. Selecting one will fill out the list on the left side with the tables in the database. |

| Table | This page contains a table widget showing all the columns in the table filled out with the data in the table. The data is editable |

| Create Table | Create a new table in the database and add it to the table list. |

| Delete Table | Delete the selected table from the database. |

| Insert Column | Insert a column in the selected table. The user will be prompted for the column name and type. |

| Delete Column | Delete the selected column. |

MOS

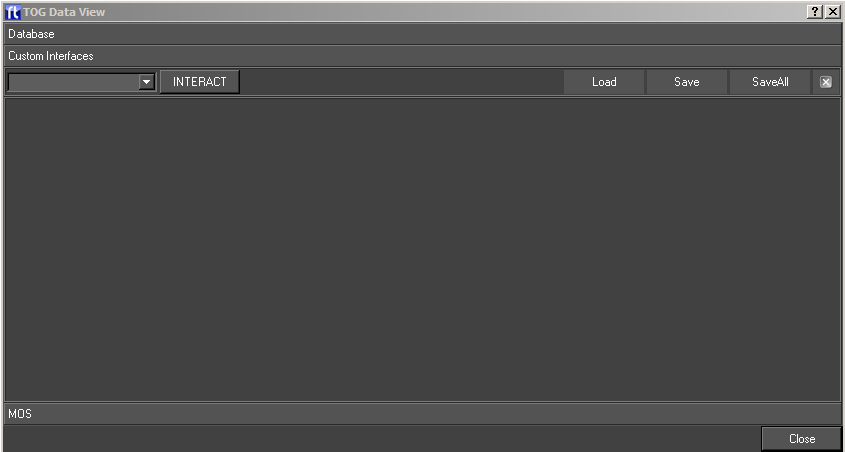

This sends MOS protocol messages to Swift. The user can create a .tcf file in the usual way and then load it into this interface. The user can then load and play graphics and methods as well as control tickers and update graphic elements directly.

Project

The user selects a project file and the corresponding .tcf file is loaded and the interface is filled out with lists of graphics and their methods.

Script

Selects a graphic and a message is sent to Swift to load and play it.

Method

Selects a method and fills out the parameter table.

Control

This is a set of buttons to play, abort and animate on methods. The user can also send messages to disconnect and quit from Swift and to clear the scenegraph.

Parameters

A table of method parameters. The value for each parameter can be changed.

Ticker Fields

Send the details of a slug to a ticker. The user specifies the ticker and slug nodes and the contents of the slug.

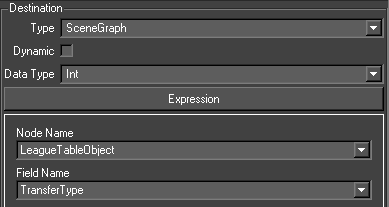

Update a scenegraph node directly. The user specifies the node and field to be updated and the new value.

Communications

This shows the actual xml sent to and received back from Swift.

User Interface

This shows all the user interfaces defined for the project. The user can flip through them and enter values. These values will be used when graphics are run and widget inputs evaluated.

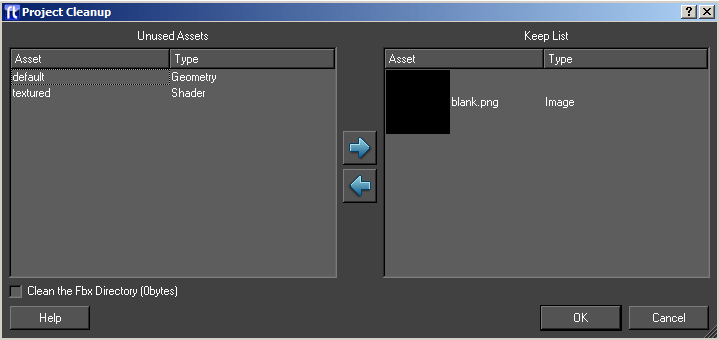

Clean Project

The clean project tool analyses your project, and lets you remove unused assets.

Why you should clean your project

As a project evolves, you will find that there will be elements that you used early on that you replace with other elements. For example, you may have been through several iterations of strap design, replacing old assets with new ones each time. These old assets may not be used anywhere in your project, but they are still loaded at startup. This has three undesirable effects :

- Your project takes longer to load on startup by loading unneeded assets.

- Your project places a greater strain on system resources than it needs to.

- Your project is physically larger on disk, making it more difficult to transfer from one machine to another.

Cleaning Your Project

To access the clean project tool, load the project, and then go to the menu Tools->Clean Project.

NOTE: Take note of any errors that occur on project load, and deal with them before cleaning. If assets are failing to load, it may affect those assets that the clean project tool deems to be in use.

NOTE: Swift will ask you to save your graphic if you have one open; this is to make sure that any recent changes to the project are available to the clean up tool.

After analysing your project, the clean up tool will pop up the following dialog. It contains two lists, and items can be moved between them.

| List | Description |

| Unused Assets | This list contains all of the assets that have been located that the project cleanup script believes are not used in your project. |

| Keep List | This list contains assets that, whilst not used, you would like to keep in the project. |

To move assets from one list to the other, select the assets (either singly or multiple select), and click on the left/right arrows to move the assets from one list to the other.

NOTE: You should check the unused asset list before continuing.

The clean project tool will comprehensively find any assets which are statically used within your project. However, it may miss shaders, textures, images and anything else that is only referenced in your project dynamically. For example, shaders which are only assigned via an input or animator. As the graphics designer you should have an understanding of which assets are dynamically assigned, and move them to the keep list before continuing.
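The logic behind the two lists can be summarised as a set difference: anything in the project that is neither statically referenced nor on the keep list is a candidate for removal. The Python sketch below illustrates this with made-up asset names; `unused_assets` is a hypothetical helper and does not reproduce how Swift analyses a project.

```python
def unused_assets(all_assets, statically_used, keep_list=()):
    """Return the assets that would be removed, sorted by name."""
    return sorted(set(all_assets) - set(statically_used) - set(keep_list))


all_assets = ["strap_v1.sha", "strap_v2.sha", "team_red.sha", "team_blue.sha"]
statically_used = ["strap_v2.sha"]

# The team shaders are only assigned through an input at run time, so a
# static analysis cannot see them; they must be moved to the keep list.
keep = ["team_red.sha", "team_blue.sha"]

print(unused_assets(all_assets, statically_used))
print(unused_assets(all_assets, statically_used, keep))
```

The first call shows why the unused list should be reviewed: without the keep list, the dynamically assigned shaders would be removed along with the genuinely obsolete one.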

Press Ok to clean the project.

You will be asked if you want to permanently delete the assets in the unused assets list, or back them up. If you choose to backup the assets, you will be asked to pick a directory to store them in.

NOTE: Deleting assets is a permanent operation, and there is no way to recover them. If in doubt, choose the backup option.

NOTE: When backing up assets, it is good practice to choose a new, empty directory. This will make it easy to move assets back into the project if you later discover assets that you require.

Reloading the project

Your project will now be reloaded automatically to take into account the final list of assets. You should note any errors that occur and compare them with any errors that occurred before the clean operation. The list should be the same, or possibly reduced.

Testing your project

After cleaning your project, you should perform a full test of all graphics to verify that the removed assets have not had any undesired effects. In particular, you will want to check graphics that :

- Set geometries, shaders or textures using inputs or animators.

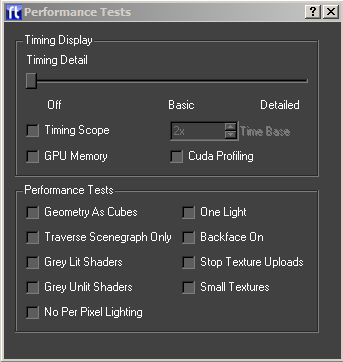

Performance Test

Provides tools for checking the performance of your graphics.

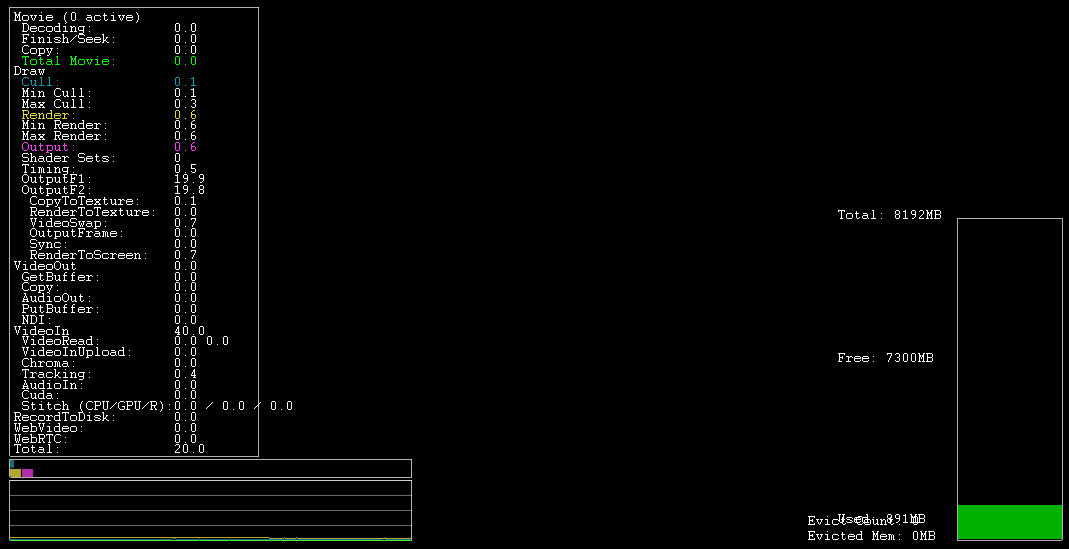

Timing Display

The timing display appears over the top of the output monitor window in Swift CG +, Swift-Playout, Swift-live and Swift-Sports. It provides detailed information on how your graphic is performing.

These options change the level of timing detail that is overlaid over the graphics.

| Option | Description |

| Timing Detail | Choose how much detail to display. |

| Timing Scope | The timing scope provides basic timing information on a line graph. |

| Time Base | Chooses the zoom level of the timing scope. |
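When reading the timing display, the figure to compare against is the time available for one frame. The Python sketch below shows that arithmetic. The 50 frames per second used in the example is only an illustration, and both function names are hypothetical; use the frame rate of your own output format.

```python
def frame_budget_ms(frames_per_second):
    """Time available to render one frame, in milliseconds."""
    if frames_per_second <= 0:
        raise ValueError("frames_per_second must be positive")
    return 1000.0 / frames_per_second


def at_frame_rate(render_time_ms, frames_per_second):
    """True if a frame rendered in render_time_ms fits within one frame period."""
    return render_time_ms <= frame_budget_ms(frames_per_second)


# Illustrative output rate of 50 frames per second.
print(frame_budget_ms(50))
print(at_frame_rate(18.5, 50), at_frame_rate(21.0, 50))
```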

Performance Tests

The performance tests deliberately alter the normal graphical rendering of your graphic in order to aid you in finding performance problems. For more information look at the Performance Troubleshooting Guide.

NOTE: All performance tests will be switched off when the performance test dialog is closed.

| Parameter | Description |

| Geometry As Cubes | Render all geometries as cubes. Tests for geometry complexity bottlenecks |

| Traverse Scenegraph Only | Do not render anything, but traverse the scenegraph. Tests for scenegraph complexity bottlenecks. |

| Gray Lit Shaders | Render all geometries with the same, lit shader. Tests for shader complexity bottlenecks. |

| Gray Unlit Shaders | Render all geometries with the same, unlit shader. Tests for shader complexity bottlenecks. |

| No Per Pixel Lighting | Turns off all per pixel lighting and Cg Effects. Tests for pixel shader complexity bottlenecks. |

| One Light | Prevents more than one light being active when rendering. Tests for lighting-related bottleneck issues. |

| Backface On | Turns back face culling on. Tests whether back face culling has a significant effect on performance. |

| Stop Texture Uploads | Stops dynamic textures from being uploaded to the graphics card. This includes movies and TMVs. Tests to see if there is a texture upload bottleneck |

| Small Textures | Replaces all textures with a single small texture. Tests to see if large textures are causing bottleneck issues. |
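One way to use these tests is to note the frame time shown by the Timing Display with each test enabled in turn, then compare it against the frame time with no tests enabled. The sketch below is a hypothetical helper and not part of Swift: the function name and the millisecond figures are illustrative assumptions, and the timings would be read by hand from the Timing Display.

```python
def rank_bottlenecks(baseline_ms, test_ms):
    """Rank performance tests by how much frame time each one saves.

    baseline_ms: frame time with no tests enabled.
    test_ms: mapping of test name -> frame time with only that test enabled.
    Returns (name, saving_ms) pairs, largest saving first.
    """
    savings = {name: baseline_ms - ms for name, ms in test_ms.items()}
    return sorted(savings.items(), key=lambda item: item[1], reverse=True)


# Illustrative numbers only; they are not measurements from Swift.
example = rank_bottlenecks(
    22.0,
    {"Geometry As Cubes": 21.5, "Small Textures": 14.0, "One Light": 20.0},
)
```

In this made-up case the largest saving comes from Small Textures, which would suggest looking at texture sizes before geometry or lighting.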

Keyer

Brings up the Keyer dialog, allowing the currently selected keyers to be set up.

NOTE: For details on the keyer interfaces, see the Swift Sports Manual and the VR documentation.

VR Interface

The VR Interface allows you to set up a VR tracking system. See the VR documentation for further details.

Lens Calibration

The lens calibration dialog allows you to calibrate a lens for use with a VR tracking system. See the VR documentation for further details.

Browser Menu

The browsers are used to view assets contained within the projects. Assets can then be dragged and dropped onto the render window to add them to the scene or to update it.

State Browser

Shows a list of states in the current project. Drag a state onto an existing object in the render window to change the state on its shader.

Geometry Browser

Shows a list of geometries in the current project. Drag a geometry onto the render window to add the geometry to the scene.

Material Browser

Shows a list of materials in the current project. Drag a material onto an existing object in the render window to change the material.

Texture Browser

Shows a list of textures in the current project. Drag a texture onto an existing object in the render window to change the texture.

Shader Browser

Shows a list of shaders in the current project. Drag a shader onto an existing object in the render window to change the shader.

Font Browser

Shows a list of fonts in the current project. Drag a font onto the render window to create a new Text Node with the selected font.

Image Browser

Shows a list of all images in the current project. Drag an image onto an object in the render window to update the image on the shader’s texture.

Paths Browser

Shows a list of paths in the current project.

Custom Browser

The custom browser shows a number of “predefined” objects that can be dropped onto a scene.

| Object | Description |

| Ticker | Creates a horizontal scrolling ticker |

| Roller | Creates a vertical scrolling ticker |

| Teletype | Creates a teletype-style ticker |

| Histogram | Constructs a histogram |

| Line Graph | Constructs a line graph |

| Bar Chart | Constructs a Bar Chart |

| Pie Chart | Constructs a Pie Chart |

| Library Objects | Any Library objects in the project will appear in the custom browser window after the predefined types. |

Dynamic Geometry Browser

Shows a list of dynamic geometries in the current project. These are geometries that can be generated inside of Swift without requiring a separate editing package. See the Dynamic Geometry Node for more details on which geometries are available.

Geometry Effect Browser

Shows a list of Geometry Effects in the current project. These are effects that can be used with an Effect Node.

Layer Effect Browser

Shows a list of Layer Effects in the current project. These are effects that can be used with a Layer Node.

Drag Operations

Some browser types provide a drop down list of options on how the drop should be applied.

| Action | Description |

| Create a Sibling Node | Creates a subtree of the chosen type under the currently selected scenegraph node |

| Create an Object | Creates a new object node under the root node and adds the subtree of the chosen type to this object node |

| Add to Existing object (e.g. n1_OBJ) | Adds the subtree of the chosen type under the chosen object node |

| New Shader | Creates a new shader |

| Override | Overrides the selected texture image with this image |

| Replace | Replaces the image on the selected texture channel |

| Create | Creates a new texture file (.txt) |

View Modes

Each browser has two modes. The user can switch between them using the buttons on the toolbar.

Icon View

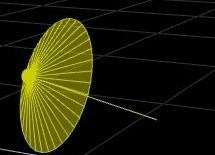

Shows an icon for each asset. For example, a geometry shows an image of the actual geometry, as in the picture above.

List View

The list view options will change depending on which browser is opened. For the state, material, texture and shader assets, this is a more detailed list of attributes contained in the asset.

Editors Menu

The editors menu lets you display one of the four asset editors available in Swift. These editors can also be accessed while looking at a Shader Node in the scenegraph.

| Editor | Description |

| Shader | Shader assets contain references to a material, state, and a number of texture assets. |

| Material | Material assets contain basic colouring information |

| Texture | Texture assets contain image references and options on how they should be applied. |

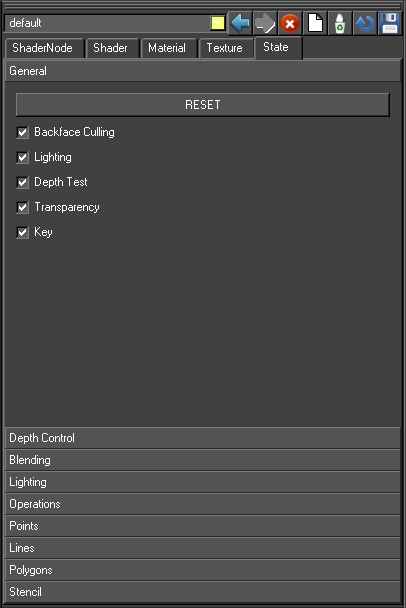

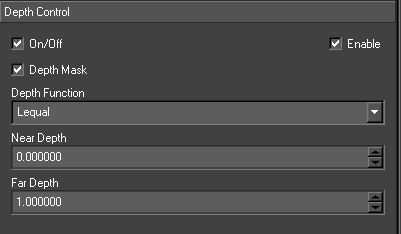

| State | State assets contain settings relating to how a geometry is rendered. |

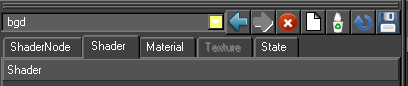

Editors

Shader, material, texture and state editing are all done from a single window with a tabbed interface that selects the type of item being edited. A common control bar is used to choose which item is being edited and provides controls for saving and loading items.

The contents of the drop down list will alter depending upon which tab (Shader, Material, Texture or State) is currently selected and will contain a list of all of the items of the current type.

Actions

The toolbar icons perform the following actions:

| Icon | Action | Description |

|  | Cancel | Cancels any changes made since the item was displayed in the editor |

|  | Create | Creates a new item of the type currently chosen |

|  | Delete | Deletes the item currently being edited |

|  | ReLoad | Loads the item from disk, any unsaved changes will be lost |

|  | Save | Saves the item currently being edited |

Saving Changes

Changes to shaders, materials, textures and states are not automatically saved. Changes are remembered in the editor, but the Save button must be used to make the changes permanent.

When saving a state, material or texture, the dialog asks whether to save just that item or all changed items. Choosing the Save All option saves every changed item of that category (state, material or texture). Saving a shader also saves its material, state and textures.

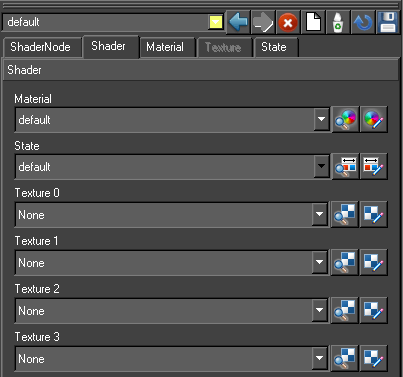

Shader Editor

A shader consists of a material, a state and textures. A single shader can be referenced by any number of shader nodes in a graphic.

NOTE: A change to a shader will affect any objects that have a shader node that references the shader. If in doubt create a new shader.
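The sharing behaviour described in the note can be pictured with a small data model. This is only an illustrative sketch: the class names, field names and asset names below are hypothetical and do not reflect how Swift stores shaders internally.

```python
from dataclasses import dataclass, field


@dataclass
class Shader:
    name: str
    material: str
    state: str
    textures: list = field(default_factory=list)  # texture slots 0 to 3


@dataclass
class ShaderNode:
    shader: Shader  # a reference to a shared shader, not a private copy


shared = Shader("logo_SHD", material="logo_MAT", state="logo_STA")
node_a = ShaderNode(shared)
node_b = ShaderNode(shared)

# Editing the shared shader is visible through every node that references it.
shared.material = "red_MAT"
seen_by_a = node_a.shader.material
seen_by_b = node_b.shader.material

# Giving one node a new shader leaves the other node untouched.
node_b.shader = Shader("logo2_SHD", material="blue_MAT", state="logo_STA")
```

After the edit both nodes see `red_MAT`; only after `node_b` is given its own shader do the two objects diverge, which is why creating a new shader is the safer choice when in doubt.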

Creating Shaders

Shaders can be created in four ways.

- by using the Create Shader button by the render window

- by choosing the option to automatically create a shader with a texture when an image is imported. See the chapter Import.

- by dropping an image from the Image Browser onto an object in the render window and selecting New Shader. See the chapter Image Browser.

- by using the Create tool on the Shader editor.

The user is prompted for a name and this is used for the new Shader and its components.

Shader Tab

| Option | Description |

| Material | Specifies the material referenced by the shader. The selected material can be changed or edited. |

| State | Specifies the state referenced by the shader. The selected state can be changed or edited. |

| Texture 0, 1, 2 and 3 | Specifies the textures referenced by the shader. The selected texture can be changed or edited. |

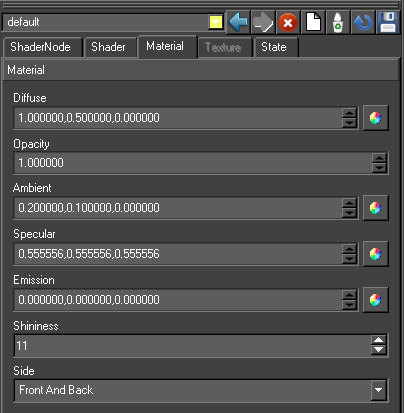

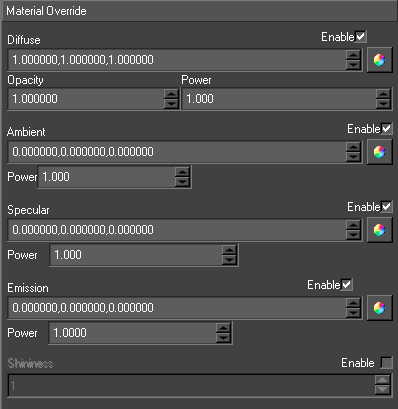

Material Tab

| Option | Description |

| Diffuse | Specifies the diffuse material colour used for lighting. The Diffuse colour is perceived as the colour of the object itself. It is the colour that the object reveals under pure white light. Type in RGB values or use the colour wheel icon to select a colour. |

| Specular | Specifies the specular material colour used for lighting. Specular colour is what we most commonly think of as a highlight. The colour of a specular highlight is typically the colour of the light source itself. The highlight size is controlled in turn by the “Shininess” value. Type in RGB values or use the colour wheel icon to select a colour. |

| Ambient | Specifies the ambient material colour used for lighting. Ambient light is generalized lighting not attributable to the direct rays from a specific light source. Without ambient light, objects in shadow would be completely black. Type in RGB values or use the colour wheel icon to select a colour. |

| Emission | Specifies the emission material colour used for lighting. Emission works exactly like Ambient colour, and can be thought of as “self illumination”. Type in RGB values or use the colour wheel icon to select a colour. |

| Opacity | Specify an explicit alpha value for an object via its material. Controls the degree of transparency of an object. Type in a normalised value, where 1 is fully opaque and 0 is totally transparent. |

| Shininess | Specifies the specular exponent of the surface. The Shininess controls the spread of specular highlights. Enter a higher value to achieve small, tight highlights. Enter a lower value to achieve a very shiny, burned out highlight. |

| Side | Specifies whether this material is set for the front, back or front and back of the object. |
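To see how these values interact, the sketch below combines them using a classic Phong-style lighting sum for a single light. This is an assumption made for illustration: the manual does not state the exact lighting equation Swift uses, and the function names and default light values here are hypothetical.

```python
def highlight(r_dot_v, shininess):
    """Specular falloff only: a higher shininess gives a tighter highlight."""
    return max(r_dot_v, 0.0) ** shininess


def shade(diffuse, specular, ambient, emission, shininess,
          n_dot_l, r_dot_v,
          light=(1.0, 1.0, 1.0), ambient_light=(0.2, 0.2, 0.2)):
    """Return an RGB colour for one light.

    n_dot_l: cosine of the angle between the surface normal and the light.
    r_dot_v: cosine of the angle between the reflected light and the viewer.
    """
    diff = max(n_dot_l, 0.0)
    # No highlight on surfaces that face away from the light.
    spec = highlight(r_dot_v, shininess) if diff > 0.0 else 0.0
    colour = []
    for d, s, a, e, lc, ac in zip(diffuse, specular, ambient,
                                  emission, light, ambient_light):
        value = e + a * ac + d * diff * lc + s * spec * lc
        colour.append(min(value, 1.0))  # clamp to the displayable range
    return tuple(colour)
```

For the same viewing angle, `highlight(0.9, 50)` is much smaller than `highlight(0.9, 5)`, which matches the description of higher Shininess values producing small, tight highlights.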

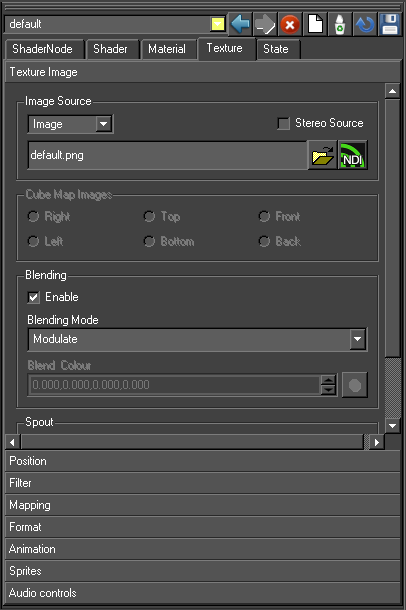

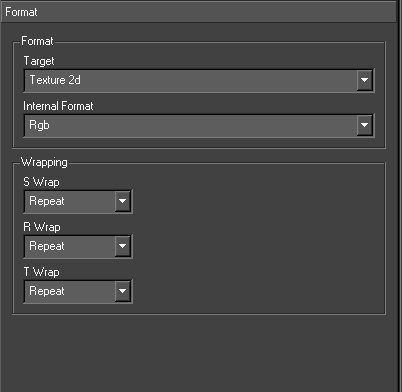

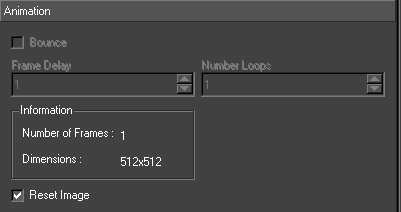

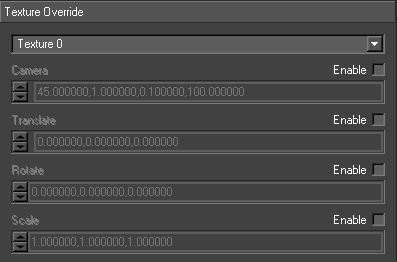

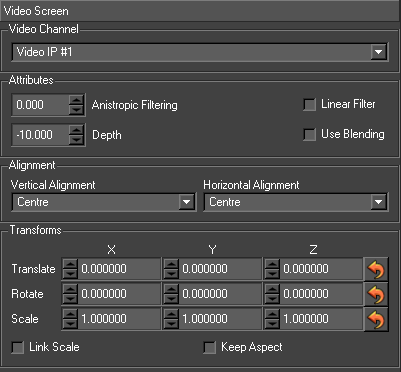

Texture Tab

This page contains all the controls for setting up a shader’s texturing. It handles just one texture at a time. The texture tab has 7 sub menus, each relevant to a particular aspect of how to manipulate and apply bitmapped images and video sources to the surfaces of objects.

Texture Image

Choose the image or video source to be applied in the shader.

Image Source

Choose the image that should be used with this texture.

| Image Source | Description |

| Image | Use a disk-based image, TMV or movie file as the image source |

| Video IP #1 | Use the first video input channel on the graphics card as the image source |

| Video IP #2 | Use the second video input channel on the graphics card as the image source |

| Video IP #3 | Use the third video input channel on the graphics card as the image source |

| Video IP #4 | Use the fourth video input channel on the graphics card as the image source |

Using video input channels depends on having the correct hardware in the machine to provide them.

If you are using a stereo image, enable the stereo mode to send the correct part of the image to the correct “eye”.

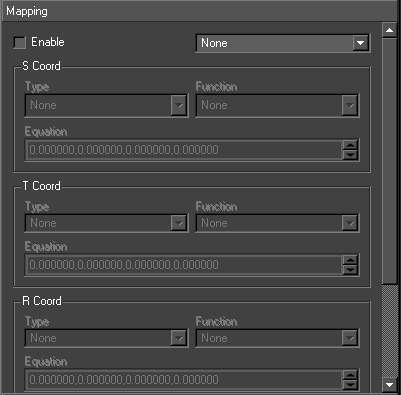

Cube Map Images

When enabled, these radio buttons control which image is placed upon each face of a cube map texture. These radio buttons are only enabled if the texture format is set to cube map. When the cube map options become active (See Mapping), use the browser to locate an image file, normally in the project’s /GMData/Images directory, for each of the six slots: right, left, top, bottom, front and back.

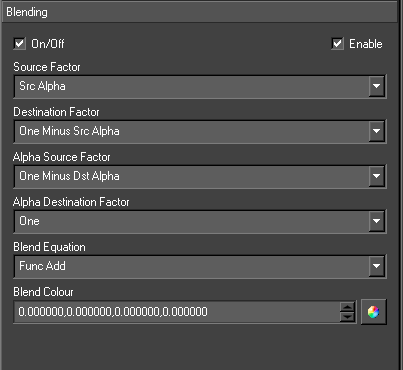

Blending

Blending determines how the image interacts with the material colour and other textures.

| Blending Type | Description |

| Modulate | multiply texture colour with material colour |

| Decal | mixes texture colour with material colour using texture colour alpha |

| Blend | blend texture colour with blend colour |

| Replace | replace material colour with texture colour |

| Add | add texture colour and material colour |

Blend Colour

Use the colour wheel icon to select a blend colour.
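The blending types above can be read as simple per-channel formulas. The sketch below uses the formulas of the classic OpenGL texture environment modes, which share the same names; that Swift follows them exactly is an assumption, and the function name and parameters are hypothetical.

```python
def clamp(x):
    """Keep a colour channel inside the 0..1 range."""
    return max(0.0, min(1.0, x))


def blend_texture(mode, material, texture,
                  texture_alpha=1.0, blend_colour=(0.0, 0.0, 0.0)):
    """Combine one RGB texture sample with the material colour."""
    result = []
    for m, t, b in zip(material, texture, blend_colour):
        if mode == "Modulate":
            value = m * t
        elif mode == "Decal":
            # The texture alpha decides how much texture covers the material.
            value = m * (1.0 - texture_alpha) + t * texture_alpha
        elif mode == "Blend":
            # The texture colour mixes between material and blend colour.
            value = m * (1.0 - t) + b * t
        elif mode == "Replace":
            value = t
        elif mode == "Add":
            value = m + t
        else:
            raise ValueError(f"unknown blending type: {mode}")
        result.append(clamp(value))
    return tuple(result)
```

For example, `blend_texture("Modulate", (1.0, 0.5, 0.0), (0.5, 0.5, 0.5))` darkens the material to `(0.5, 0.25, 0.0)`, while `"Replace"` ignores the material colour entirely.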

Sound

Enable the sound checkbox if audio is required.

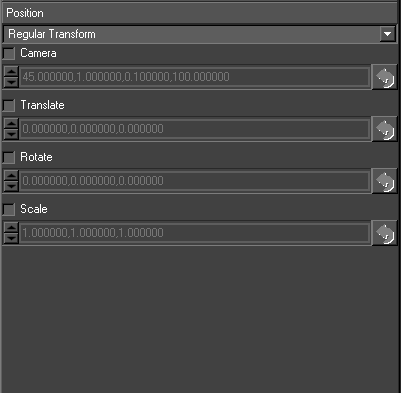

Position

Position is used to apply a transformation to the texture coordinates prior to applying the texture to the model – this moves, rotates and scales the texture on the object surface.

There are 3 options in the drop down menu:

| Option | Description |

| Regular Transform | Options are Translate, Rotate, Scale. These options allow the user to transform textures (images) on objects. |

| Bind to Camera | The texture translation is taken from a camera and the values above are overridden |

| Bind to light | The texture translation is taken from a light and the values above are overridden. |

Camera, Translate, Rotate and Scale are used as follows.

| Option | Description |

| Camera | a camera perspective transformation to apply to the texture coordinates. The four values are, in order, field of view, aspect ratio, near clip plane and far clip plane. This is used for projective texturing, where the texture behaves like the film in a cinema projector that is projected onto the screen and audience. |

| Translate | a translation to the texture coordinates. |

| Rotate | a rotation to the texture coordinates (specified as x, y, z angles). |

| Scale | a scale factor to the texture coordinates |
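As a rough illustration of what a regular transform does to a texture coordinate, the sketch below scales, rotates and then translates a 2D (u, v) pair. The order of operations, the rotation about a single axis and the function name are all assumptions made for the example; the manual does not specify how Swift composes these values.

```python
import math


def transform_uv(u, v, translate=(0.0, 0.0), rotate_deg=0.0, scale=(1.0, 1.0)):
    """Scale, rotate about the origin, then translate a texture coordinate."""
    su, sv = u * scale[0], v * scale[1]
    angle = math.radians(rotate_deg)
    ru = su * math.cos(angle) - sv * math.sin(angle)
    rv = su * math.sin(angle) + sv * math.cos(angle)
    return ru + translate[0], rv + translate[1]


# A scale factor of 2 doubles the coordinate, so the image repeats (or
# shrinks) across the surface depending on the texture's wrap settings.
doubled = transform_uv(1.0, 0.0, scale=(2.0, 2.0))

# A translation simply offsets the coordinate.
shifted = transform_uv(0.25, 0.25, translate=(0.5, 0.0))
```

Because the transform is applied to the coordinates rather than to the image, a larger scale value makes the image appear smaller on the object.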

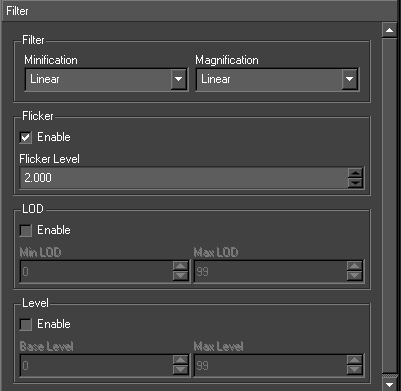

Filter

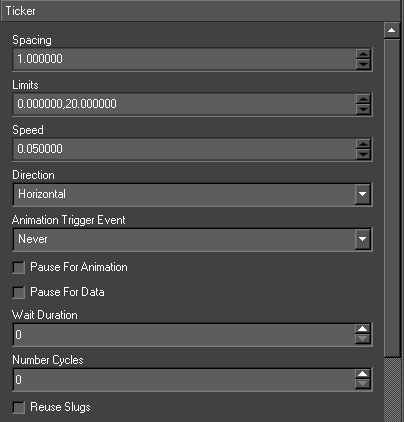

Filtering controls the appearance of the texture image when it is rendered smaller or larger than its original size. There is a performance versus quality trade off with different filtering options.